2024 VOL. 11, No. 1

Abstract: This study aimed at evaluating the effectiveness of microlearning in higher education. The sample consisted of first-year MBA students, and a post-test control group design was used to assess the effectiveness of a microlearning module. The results indicated that the use of microlearning was significantly related to learning performance and participants' reactions to the module. Moreover, the microlearning group scored significantly higher than the control group. The findings suggest that microlearning has the potential to improve learning outcomes and enhance participant engagement. However, the study has certain limitations, and future research is needed to gain a comprehensive understanding of the optimal design and delivery of microlearning modules. The study supports the use of microlearning in higher education as an effective instructional strategy.

Keywords: microlearning, instructional design, instructional methods, cognitive load theory, learning effectiveness

Reading and comprehending text is a critical skill central to academic success and lifelong learning (National Reading Panel, 2000), including online learning, which also requires additional digital skills. However, many students struggle with reading comprehension, and traditional approaches to teaching reading may not be effective for all students. According to the National Assessment of Educational Progress (NAEP), only 35% of fourth-grade students in the United States performed at or above the proficient level in reading in 2019 (NAEP, 2019). The gap in reading proficiency is even more pronounced among students from low-income families and those from minority backgrounds (Reardon et al., 2012; National Center for Education Statistics, 2019). Students who struggle with reading comprehension face numerous challenges, including limited access to information, reduced academic opportunities, and lower lifetime earning potential (Kirsch et al., 2011). Thus, it is crucial to identify practical solutions that can help students develop and enhance their reading comprehension skills. Recent research has emphasised the importance of personalised and adaptive learning strategies in improving reading comprehension skills (Fisher & Frey, 2020), as well as the role of technology in supporting diverse learning needs (EdTech, 2021).

In recent years, advances in artificial intelligence (AI) and natural language processing (NLP) have led to the development of personalised learning platforms that can adapt to the needs and abilities of each student. These platforms use machine learning algorithms to analyse student performance data and provide personalised recommendations for reading materials and comprehension exercises (Xie et al., 2018). An example of a personalised learning platform for reading is Lexia Core5 Reading. This platform assesses each student's reading abilities and tailors its activities to their needs. For example, if a student struggles with phonics, Lexia Core5 provides specific, interactive exercises to improve this skill. The platform continuously adapts to students' progress, offering more complex tasks as their abilities improve. Educators can monitor this progress through real-time data, allowing for targeted, in-class support. This approach ensures personalised and effective reading skill development for each student.

Recent advances in AI and NLP have shown promising results in addressing this challenge. Personalised learning platforms can provide a customised and adaptive approach to reading education that targets individual students' needs and abilities (Xie et al., 2018). Integrating AI into reading education offers a unique opportunity to improve reading proficiency among students, enabling them to access and comprehend increasingly complex texts, improve academic performance, and broaden their knowledge and intellectual horizons.

Research has shown that personalised learning platforms can be highly effective in improving student outcomes in various subjects, including reading comprehension. For instance, a study by Liu et al. (2020) found that an AI-based personalised reading platform significantly improved students' reading comprehension skills in a Chinese primary school. Another study by Iwata et al. (2020) showed that an AI-based writing feedback system significantly improved students' reading comprehension skills by providing personalised feedback on their writing assignments. Other research has also demonstrated the efficacy of AI-based personalised learning platforms in enhancing reading comprehension skills. For example, a study by Akiba et al. (2020) found that an AI-based reading comprehension support system improved the reading comprehension skills of Japanese middle school students. The system provided personalised recommendations for reading materials based on each student's reading level and interests, and gave feedback on their comprehension of the material.

Similarly, a study by Khan and Mutawa (2021) examined the effectiveness of an AI-based personalised reading platform in improving the reading comprehension skills of Arab learners of English as a foreign language. The platform was designed to provide personalised recommendations for reading materials based on each learner's reading level, interests, and language proficiency. The results showed that the platform significantly improved learners' reading comprehension skills and increased their motivation and engagement in reading. However, further research is still needed on the effectiveness of AI-based personalised reading platforms in reading instruction. This study aimed to investigate the effectiveness of AI-based personalised reading platforms in enhancing reading comprehension skills among students. Specifically, it sought to explore how these platforms can be designed and implemented to maximise their effectiveness, as well as their potential impact on student motivation and engagement in reading.

By examining the effectiveness of AI-based personalised reading platforms, this study has the potential to contribute to the development of more effective approaches to teaching reading and promoting literacy skills. It may also help to inform the design and implementation of personalised learning platforms in other educational contexts. Overall, the integration of AI and personalised learning platforms has the potential to revolutionise reading instruction and provide effective solutions for students struggling with reading comprehension.

This study investigated the effects of an AI-based personalised reading platform on reading comprehension among senior high school students in Indonesia. Using cluster random sampling, 85 students with diverse backgrounds were divided into an experimental group, which engaged with the AI platform, and a control group, which followed the standard reading curriculum. The study aimed to evaluate the intervention's effectiveness without disrupting the school's educational practices. Inclusion criteria were senior high school enrolment and consent; students with reading disabilities were excluded. Conducted over eight weeks in a natural setting, the study involved pre- and post-assessments using standardised tests. Data analysis used descriptive and inferential statistics to assess the impact on comprehension, motivation and engagement. The study adhered to APA ethical guidelines and ensured participant confidentiality.

This study's AI-based personalised reading platform was designed to provide each student with a highly personalised reading experience. To achieve this, the platform incorporated advanced algorithms that analysed each student's reading level, interests, and learning style to provide them with personalised recommendations for reading materials. The platform used a variety of metrics, such as word frequency, sentence length and reading speed, to determine the most appropriate reading level for each student.
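As an illustration only, two of the metrics named above (sentence length and reading speed) can be computed with a few lines of Python. This is a minimal sketch under stated assumptions: the function names `surface_metrics` and `reading_speed_wpm` are hypothetical, the sentence splitting is deliberately naive, and nothing here reflects how the platform used in the study actually works. Word frequency is omitted because it would require a reference corpus that the study does not describe.

```python
import re


def surface_metrics(text: str) -> dict:
    """Count sentences and words and return the average sentence length.

    Sentences are split naively on '.', '!' and '?'; this is an
    illustrative simplification, not the platform's method.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    avg_len = len(words) / len(sentences) if sentences else 0.0
    return {
        "sentences": len(sentences),
        "words": len(words),
        "avg_sentence_length": avg_len,
    }


def reading_speed_wpm(word_count: int, seconds: float) -> float:
    """Reading speed in words per minute for a passage read in `seconds`."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return word_count / (seconds / 60.0)


sample = "The cat sat. The dog ran fast."
metrics = surface_metrics(sample)
print(metrics)
print(reading_speed_wpm(300, 120.0))
```

A real system would combine such measures with performance data; how the metrics were weighted in the study's platform is not reported.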

In addition to personalised reading recommendations, the platform also provided personalised comprehension exercises that helped students improve their reading comprehension skills. The exercises were designed to align with each student's reading level and gradually increased in difficulty as the student progressed. The platform also provided feedback on students' performance, highlighting areas where they may have needed additional support.

The intervention was implemented over eight weeks for the experimental group, with each session lasting approximately 30 minutes. During this time, students accessed the AI-based personalised reading platform during their regular reading activities. These regular reading activities involved textbook reading, answering questions, and other conventional reading practices. To ensure that students could engage fully with the platform, they received training on using it effectively. The research team conducted the training and was also available to provide ongoing support throughout the study.

In this study, the researcher opted to use ReadTheory as the primary tool for intervention based on its unique features and proven effectiveness in teaching reading. ReadTheory is distinctive in teaching reading because it is an adaptive online platform that provides personalised reading materials for students based on their individual reading levels and progress. The program uses an algorithm that adjusts the difficulty level of the reading passages and comprehension questions to match each student's reading ability, ensuring that they are challenged but not overwhelmed. Additionally, ReadTheory offers a wide range of reading materials covering various topics, including science, social studies, literature and more, making it suitable for students of different interests and backgrounds. The program also provides instant feedback on students' performance, allowing them to monitor their progress and areas that need improvement. Overall, ReadTheory is a highly effective tool for teaching reading as it provides personalised, engaging and challenging reading materials for students. It also provides teachers with valuable data and insights into students' reading abilities and progress.

For this study, pre-tests and post-tests were conducted to assess the effectiveness of the AI-based personalised reading platform in enhancing the students' reading comprehension skills. The pre-test was administered before the intervention to establish a baseline of the students' reading comprehension skills. The post-test was conducted after the eight-week intervention period to evaluate the students' progress and determine the effectiveness of the AI-based personalised reading platform. The same standardised reading comprehension test was used for both the pre-test and the post-test to ensure consistency and reliability of the results. Additionally, surveys were given to the students to gather information on their attitudes towards reading and their engagement with the AI-based personalised reading platform before and after the intervention. The pre-test and post-test results, along with the survey data, were analysed using descriptive and inferential statistics to evaluate the effectiveness of the personalised reading platform in enhancing the students' reading comprehension skills.

To assess the AI-based personalised reading platform's impact on reading comprehension, the study employed the Degrees of Reading Power (DRP) test for pre- and post-intervention evaluation. The DRP test is widely recognised for its comprehensive coverage of reading comprehension (literal and inferential comprehension, plus vocabulary) and for its extensive validation and reliability across grade levels. This choice ensured consistent and reliable baseline and progress measurements, enabling the analysis of data through descriptive and inferential statistics to discern any significant enhancements in reading comprehension post-intervention. The DRP's established credibility and comparability with other studies underline its suitability for this research, facilitating a nuanced understanding of the personalised platform's efficacy relative to the existing literature.

Regarding the data analysis, descriptive statistics were used to summarise and describe the data, such as measures of central tendency and variability. Inferential statistics, such as t-tests, were used to examine the significance of any differences between the intervention and control groups. Additionally, the study explored the impact of the intervention on motivation and engagement in reading through secondary analyses. These analyses involved examining survey and observational data to identify any changes or patterns in student motivation and engagement throughout the intervention period.

In terms of ethical considerations, the study adhered to the ethical principles outlined by the American Psychological Association (APA) to ensure the safety and well-being of all participants. Informed consent was obtained from all participants and their parents or legal guardians, and they were made aware of their right to withdraw from the study at any time. The study also received approval from the Institutional Review Board (IRB) at the researcher's institution, which ensured that the study followed ethical guidelines and protected the rights and welfare of the participants. Participants were assured of the confidentiality of their data, and no identifying information was collected to protect their privacy. Any potential risks associated with participation in the study were minimised, and participants were provided with support and resources as needed.

In this section, we discuss the key findings of our study and relate them to previous research in the field. We also address the limitations of the study and provide suggestions for future research. Finally, we discuss the practical implications of our findings for educators and policymakers.

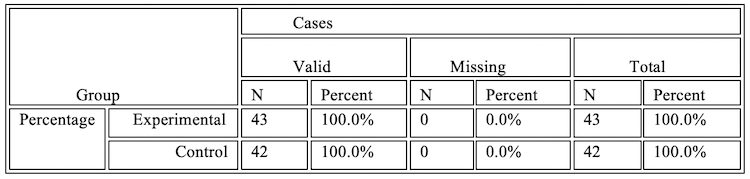

Table 1 provides a summary of case processing for an experiment comparing the effectiveness of an AI-based personalised reading platform to enhance reading comprehension between two groups: an experimental group using the AI-based reading platform and a control group. The table shows that there were no missing cases for either group, and that the total number of cases was 43 for the experimental group and 42 for the control group. The percentages indicate that all cases were valid and included in the analysis. This information is important for ensuring the reliability and validity of the experiment's results.

Table 1: Case Processing Summary

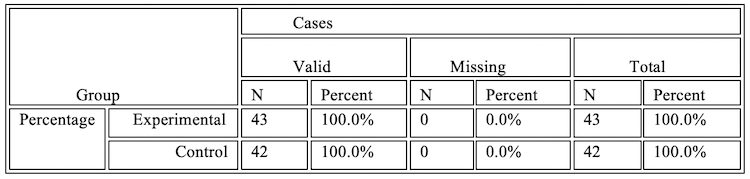

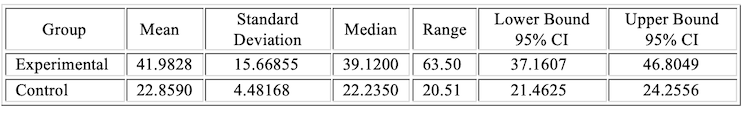

Table 2 provides descriptive statistics for two groups: the experimental group, which used an AI-based personalised reading platform, and the control group, which did not use the platform. The focus of the study was on the effectiveness of the platform in enhancing reading comprehension. The table shows a mean percentage score of 41.98 for the experimental group, which is the group's average reading comprehension score. The 95% confidence interval for the mean ranges from 37.16 to 46.80, which means that the population's true mean is likely to fall within this range with 95% confidence. The table also reports other measures of central tendency, such as the 5% trimmed mean and the median, and measures of variability, such as the variance, standard deviation, minimum and maximum scores, range and interquartile range. The skewness and kurtosis values describe the shape of the score distribution.

Table 2: Descriptive Statistics

For the control group, Table 2 shows the same descriptive statistics as for the experimental group. The mean percentage score for reading comprehension for this group was 22.86, which is much lower than that of the experimental group. The 95% confidence interval for the mean ranged from 21.46 to 24.25. The table also reports other measures of central tendency and variability. The skewness and kurtosis values describe the shape of the score distribution.
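The reported intervals can be checked from the group means, the standard deviations and group sizes given in Table 4 (15.67 and 43 for the experimental group, 4.48 and 42 for the control group), and the two-sided 95% critical values of the t distribution. The sketch below assumes the usual formula, mean ± t × SD / √n. The critical values (2.0181 for 42 degrees of freedom, 2.0195 for 41) come from standard t tables and are an assumption of this example, not figures from the study. The helper name `mean_ci` is hypothetical.

```python
import math


def mean_ci(mean: float, sd: float, n: int, t_crit: float) -> tuple:
    """Confidence interval for a mean: mean +/- t_crit * sd / sqrt(n)."""
    half_width = t_crit * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


# Two-sided 95% critical values from standard t tables (assumed).
T_CRIT_DF42 = 2.0181  # experimental group, df = 43 - 1
T_CRIT_DF41 = 2.0195  # control group, df = 42 - 1

exp_low, exp_high = mean_ci(41.98, 15.67, 43, T_CRIT_DF42)
ctl_low, ctl_high = mean_ci(22.859, 4.48, 42, T_CRIT_DF41)

print(f"experimental: {exp_low:.2f} to {exp_high:.2f}")
print(f"control:      {ctl_low:.2f} to {ctl_high:.2f}")
```

Because the inputs are rounded to two decimals, the computed bounds may differ from the reported ones in the last digit.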

Table 3 shows the results of normality tests conducted on the experimental and control groups. The normality tests used were the Kolmogorov-Smirnov test and the Shapiro-Wilk test. The first row of the table is for the experimental group, and the second is for the control group. For each test, the table reports the value of the test statistic, the degrees of freedom and the significance level (p-value). An asterisk (*) in the significance column marks a p-value that is a lower bound of the true significance; the Lilliefors Significance Correction was applied to the Kolmogorov-Smirnov test. In both groups, the p-values for the Kolmogorov-Smirnov and Shapiro-Wilk tests are greater than 0.05, so the hypothesis of normality is not rejected. It is therefore reasonable to treat the data in both groups as approximately normally distributed and to use parametric statistical tests.

Table 3: Tests of Normality

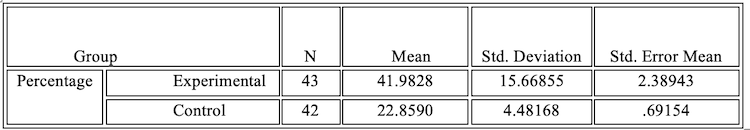

Table 4 shows the group statistics for the experimental and control groups in a study on the effectiveness of an AI-based personalised reading platform to enhance reading comprehension. The "N" column indicates the number of cases or participants in each group, while the "Mean" column shows the average or mean score of each group. The "Std. Deviation" column represents the degree of variability or dispersion of the data from the mean, while the "Std. Error Mean" column indicates the standard error of the mean, which is the standard deviation divided by the square root of the sample size.

Table 4: Group Statistics

In this study, the experimental group consisted of 43 participants, with a mean score of 41.98 and a standard deviation of 15.67, while the control group included 42 participants, with a mean score of 22.86 and a standard deviation of 4.48. The standard error mean for the experimental group is 2.39, and for the control group is 0.69. This table provides a summary of the basic descriptive statistics for the study's two groups, providing an initial indication of the effectiveness of the AI-based reading platform in enhancing reading comprehension.
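The standard errors quoted above follow directly from the definition given for Table 4 (standard deviation divided by the square root of the sample size). A minimal check in Python, using only the values reported in this section; the function name `standard_error` is illustrative:

```python
import math


def standard_error(sd: float, n: int) -> float:
    """Standard error of the mean: SD divided by sqrt(n)."""
    return sd / math.sqrt(n)


se_experimental = standard_error(15.67, 43)
se_control = standard_error(4.48, 42)

print(round(se_experimental, 2), round(se_control, 2))  # 2.39 0.69
```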

The results of the independent samples t-test provide further support for the effectiveness of the AI-based reading platform. The t-test was used to compare the mean scores of the two groups and determine whether the difference between the two means is statistically significant. The test found a significant difference between the mean scores of the experimental and control groups (t = 7.611, p < .001), indicating that the AI-based reading platform was effective in enhancing reading comprehension. Additionally, Levene's Test for Equality of Variances found that the variances in the two groups were significantly different, so the t-test that does not assume equal variances is the appropriate one to interpret; the difference remains significant under that test.

Table 5 presents the results of an independent samples t-test conducted to determine whether there was a statistically significant difference in reading comprehension scores between students who used an AI-based personalised reading platform (experimental group) and those who did not (control group). The table first reports the results of Levene's test for equality of variances, which determines whether the variances of the two groups are significantly different. The results show that the variances are significantly different (F = 45.526, p < .001), so the assumption of equal variances is not met and the results that do not assume equal variances should be interpreted.

Table 5: Independent Samples Test

Next, Table 5 reports the results of the t-test for equality of means, which tests whether the difference in means between the two groups is statistically significant. Assuming equal variances, there is a significant difference in means between the experimental and control groups (t = 7.611, df = 83, p < .001), with a mean difference of 19.12. The standard error of the difference is 2.51, and the 95% confidence interval of the difference is 14.12 to 24.12. Table 5 also reports the results of the t-test assuming unequal variances, which are similar (t = 7.688, df = 48.979, p < .001). Overall, the table indicates a significant difference in reading comprehension scores between students who used the AI-based personalised reading platform and those who did not.
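Both t statistics can be approximately reproduced from the summary statistics in Table 4. The sketch below assumes the standard pooled-variance formula for the equal-variances test and the Welch formula with Welch-Satterthwaite degrees of freedom for the unequal-variances test. The function names are illustrative, and small discrepancies from the reported values are expected because the inputs are rounded.

```python
import math


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's t-test from summary statistics (equal variances assumed).

    Returns (t, degrees of freedom, standard error of the difference).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df, se


def welch_t(m1, s1, n1, m2, s2, n2):
    """Welch's t-test from summary statistics (unequal variances).

    Degrees of freedom use the Welch-Satterthwaite approximation.
    """
    v1 = s1 ** 2 / n1
    v2 = s2 ** 2 / n2
    se = math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return (m1 - m2) / se, df, se


# Means, standard deviations and group sizes as reported in Table 4.
experimental = (41.98, 15.67, 43)
control = (22.859, 4.48, 42)

t_equal, df_equal, se_equal = pooled_t(*experimental, *control)
t_welch, df_welch, se_welch = welch_t(*experimental, *control)

print(f"equal variances:   t = {t_equal:.2f}, df = {df_equal}, SE = {se_equal:.2f}")
print(f"unequal variances: t = {t_welch:.2f}, df = {df_welch:.2f}, SE = {se_welch:.2f}")
```

The p-values are not computed here because they require the t distribution's cumulative function, which is not in the Python standard library.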

The results of the independent samples t-test suggest that the AI-based personalised reading platform is effective in enhancing reading comprehension. The statistically significant difference between the mean scores of the experimental and control groups indicates that the AI-based platform had a positive impact on students' reading comprehension. The mean difference of 19.12 between the two groups indicates that students who used the AI-based platform performed better on the reading comprehension test than those who did not.

The results of Levene's test for equality of variances indicate a significant difference in variances between the two groups, which can affect the validity of a t-test that assumes equal variances. When unequal variances were assumed, the t-test still showed a significant difference in means between the two groups, so the conclusion does not depend on the equal-variances assumption. These findings are consistent with previous research on the benefits of personalised learning and the use of AI in education. Personalised learning has been shown to be effective in improving student learning outcomes, and AI-based learning platforms can provide tailored learning experiences to individual students based on their reading level and interests. Additionally, the use of AI in education has been shown to have several benefits, including analysing vast amounts of data to provide insights into student performance and providing real-time feedback to students.

The effectiveness of personalised reading platforms has been widely studied in recent years. Studies have shown that personalised learning, including personalised reading platforms, can improve student learning outcomes (Cavanaugh et al., 2019). Moreover, AI-based personalised reading platforms are particularly effective, as they can provide tailored learning experiences to individual students based on their reading level and interests (VanLehn et al., 2019). This personalised approach to learning has been shown to be more effective than traditional classroom-based instruction (VanLehn et al., 2019).

Additionally, the use of AI in education has been shown to have several benefits. AI can analyse vast amounts of data to provide insights into student performance and identify areas where students need additional support (Blikstein, 2018). Moreover, AI-based learning platforms can provide real-time feedback to students, which has been shown to be effective in enhancing learning outcomes (D'Mello & Graesser, 2012).

The results from this study provide support for the effectiveness of an AI-based personalised reading platform in enhancing reading comprehension. This finding is consistent with previous research on the benefits of personalised learning and the use of AI in education. The use of AI-based learning platforms can provide personalised learning experiences to students, identify areas where students need additional support, and provide real-time feedback, leading to improved learning outcomes.

It is important to note that while the results of the t-test suggest that the AI-based personalised reading platform was effective in enhancing reading comprehension, there may be other factors that contributed to the difference in mean scores between the experimental and control groups. Future research could investigate these factors and their potential impact on the effectiveness of the AI-based platform. Overall, this study's results support the effectiveness of AI-based personalised learning platforms in enhancing reading comprehension. The use of AI in education has the potential to provide personalised learning experiences, identify areas where students need additional support and provide real-time feedback, leading to improved learning outcomes.

In conclusion, this study provides evidence that AI-based personalised reading platforms can effectively improve reading comprehension among senior high school students. The results indicate that students who used the platform outperformed those who did not, highlighting the potential of technology to revolutionise the teaching of reading. Given the prevalence of reading difficulties among students, the findings of this study have important implications for educators and administrators seeking to enhance students' reading skills. The study's results suggest that incorporating AI-based personalised reading platforms into teaching strategies could be a promising approach to improving reading skills. These platforms can provide students with individualised reading materials and feedback, helping them develop their comprehension skills in a way tailored to their needs. As such, educators and administrators should consider exploring the use of these platforms in their teaching practices.

Overall, this study highlights the importance of leveraging technology to support student learning and underscores the potential of AI-based platforms in enhancing reading comprehension. As technology continues to evolve, educators and administrators should remain vigilant in exploring new approaches to teaching and learning, and consider the potential benefits of incorporating AI-based platforms into their teaching strategies.

The findings from this study underscore the transformative potential of AI-based personalised reading platforms in educational practice. For educators and learning developers, there is a compelling case for integrating these advanced technological tools into teaching strategies. The capacity of AI to tailor educational content to students' individual needs marks a significant shift from traditional one-size-fits-all approaches, enabling a more nuanced and effective engagement with diverse learning styles and capabilities. Moreover, the interactive nature of these platforms, coupled with their immediate feedback, can boost student engagement and motivation, making learning a more dynamic and appealing experience.

Additionally, the utility of real-time data and insights provided by these platforms empowers educators with data-driven decision-making capabilities. This enhances the efficacy of teaching methods and enables timely interventions tailored to individual student needs. Significantly, the inclusivity aspect of these platforms cannot be overstated; they offer a means to bridge educational divides, reaching students from varied backgrounds and providing them with customisable and accessible educational resources.

While this study provides a solid initial foundation for using AI-based personalised reading platforms, further research is needed to explore their long-term impact on students' reading skills. Future studies could investigate the efficacy of these platforms across different age groups and reading levels, as well as in other educational contexts. Such research could help to further establish the potential of AI-based personalised reading platforms as valuable tools for improving reading comprehension, and could pave the way for broader implementation, potentially revolutionising reading education beyond the confines of high school. Moreover, as technological landscapes evolve, educators must stay abreast of these changes, continuously adapting their methods to align with the shifting contours of educational needs and possibilities. In sum, this study advocates for a proactive embrace of AI-based personalised learning tools, recognising them as crucial components in advancing contemporary educational practices and improving student learning outcomes.

Akiba, M., Yamamoto, Y., & Fujimoto, A. (2020). An AI-based reading comprehension support system for middle school students. Journal of Educational Technology Development and Exchange, 13(2), 87-98. https://doi.org/10.11648/j.etde.20200102.12

Blikstein, P. (2018). Artificial intelligence and the future of education. Science Robotics, 3(21), eaat9590. https://doi.org/10.1126/scirobotics.aat9590

Cavanaugh, C., Gillan, K.J., Kromrey, J., Hess, M., & Blomeyer, R. (2019). Personalised learning: A practical guide for engaging students with technology. Corwin Press.

D'Mello, S., & Graesser, A. (2012). Dynamics of affective states during complex learning. Learning and Instruction, 22(2), 145-157. https://doi.org/10.1016/j.learninstruc.2011.08.002

EdTech. (2021). Integrating technology in the classroom: Trends and insights. EdTech Magazine.

Fisher, D., & Frey, N. (2020). The distance learning playbook, grades K-12: Teaching for engagement and impact in any setting. Corwin.

Hernandez, D.J. (2011). Double jeopardy: How third-grade reading skills and poverty influence high school graduation. The Annie E. Casey Foundation.

Iwata, Y., Yokoyama, T., & Umemura, Y. (2020). An AI-based writing feedback system for improving reading comprehension skills. Educational Technology Research and Development, 68(4), 1739-1759. https://doi.org/10.1007/s11423-020-09774-7

Khan, A., & Mutawa, M. (2021). Enhancing reading comprehension skills of Arab EFL learners using an AI-based personalised reading platform. International Journal of Emerging Technologies in Learning, 16(6), 119-136. https://doi.org/10.3991/ijet.v16i06.12695

Kirsch, I., Jungeblut, A., Jenkins, L., & Kolstad, A. (1993). Adult literacy in America: A first look at the results of the National Adult Literacy Survey. National Center for Education Statistics.

Liu, Y., Zou, W., & Wang, Y. (2020). An AI-based personalised reading platform for Chinese primary school students. Journal of Educational Technology Development and Exchange, 13(1), 1-16. https://doi.org/10.11648/j.etde.20200101.11

NAEP. (2019). The nation's report card: 2019 reading assessment. National Center for Education Statistics.

National Assessment of Educational Progress. (2019). NAEP report: Reading performance in the United States. National Center for Education Statistics.

National Center for Education Statistics. (2019). The condition of education 2019. U.S. Department of Education.

National Reading Panel. (2000). Teaching children to read: An evidence-based assessment of the scientific research literature on reading and its implications for reading instruction. National Institute of Child Health and Human Development.

Reardon, S.F., Valentino, R.A., & Shores, K.A. (2012). Patterns of literacy among U.S. students. Educational Researcher, 41(8), 297-312.

VanLehn, K., Lynch, C., Schulze, K., Shapiro, J.A., Shelby, R., Taylor, L., ... & Weinstein, A. (2019). The Andes physics tutoring system: Lessons learned. International Journal of Artificial Intelligence in Education, 29(3), 301-329. https://doi.org/10.1007/s40593-019-00189-7

Xie, Y., Ke, Y., & Sharma, P. (2018). Intelligent personalised education system based on data mining and machine learning. Journal of Ambient Intelligence and Humanized Computing, 9(5), 1585-1597. https://doi.org/10.1007/s12652-017-0529-9

Author Notes

https://orcid.org/0009-0001-5884-3129

Muhamad Taufik Hidayat is an Assistant Professor currently teaching at Institut Pendidikan Indonesia Garut. He received his Master's Degree in English Education from Universitas Pendidikan Indonesia, where he developed a keen interest in language, education, language learning, language acquisition and translation studies. Email: mtaufikhidayat637@gmail.com, muhamadtaufik@institutpendidikan.ac.id

Cite as: Hidayat, M.T. (2024). Effectiveness of AI-based personalised reading platforms in enhancing reading comprehension. Journal of Learning for Development, 11(1), 115-125.