2023 VOL. 10, No. 2

Abstract: This article explores student perceptions of writing online examinations for the first time during the Covid-19 pandemic. Prior to the pandemic, examinations at an open and distance learning institution in South Africa were conducted as venue-based examinations. From March 2020, all examinations were moved online as an emergency measure to adhere to safety and health protocols. Although students in developed countries have reported benefits of online examinations, less is known about the experiences of students in the Global South when it comes to writing examinations online, and not enough is known about the benefits and challenges of online examinations introduced as an emergency measure. We aimed to explore student perceptions of writing online examinations for the first time and to improve examination processes by including student views. Through an analysis of 336 written responses to an open-ended question posed at the end of an online survey, we established that digital access, the duration of the examination, and the examination system interface affected students’ success in online examinations. Based on the findings, we recommend that students be given the tools and data needed to participate in online examinations. Furthermore, students should be granted ample opportunity to practise writing online examinations while receiving the necessary support.

Keywords: online examinations, student perceptions, digital divide, open distance learning, Covid-19.

In recent history, the most significant disruption to education was brought about by the Covid-19 pandemic (Radu et al., 2020). Social distancing measures necessitated by the pandemic required a rapid escalation to online examinations to keep people safe and to meet assessment objectives (Khan et al., 2021; Reedy et al., 2021; UNESCO, 2020). While institutions gained some cost and administrative benefits (Reedy et al., 2021), online examinations also brought several challenges. The speed at which the change happened took university administrators, academics, and students by surprise. A number of studies have examined this emergency mode of examination, but most focused on quantitative data, and only a few featured Africa or South Africa. Our research focused on qualitative aspects of student perceptions of online examinations and was set in South Africa, involving 336 participants.

The need for studies from developing countries has been noted (Afacan Adanir et al., 2020), and we also write to address the gap in what is known about pandemic-driven online examinations (Bishnoi & Suraj, 2020). Students' perceptions of online examinations in developed countries have been found to be positive (Butler-Henderson & Crawford, 2020), but perceptions in developing countries are still unclear. The South African higher education landscape is complex, known to be under-resourced, and caters to students from diverse social and economic backgrounds (Ngqondi, Maoneke & Mauwa, 2021), leading to inequalities within this space. Bishnoi and Suraj (2020) affirm that students from poorer backgrounds are disadvantaged by online examinations. This is echoed by Rahim (2020), who identified student diversity (including access to the internet) as one of nine factors to consider when designing online assessments. However, design implies a thoughtful process, whereas the move to online examinations in 2020 was rushed.

The context of this research is a mega open distance learning university in South Africa. Prior to Covid-19, many courses were offered in a correspondence (postal) mode, with support and resources offered online for those students who could access the learning management system (LMS). Students could submit formative assessments by post or upload them to the LMS. Summative assessment was conducted either via a venue-based examination (more common) or by submitting a portfolio. In keeping with safety protocols, students were required to submit formative assessments online from early 2020, and traditional venue-based examinations moved online. In response to the paucity of literature identified above, we investigated how students experienced this change in examination format. This research is significant because it provides a more holistic picture of student perceptions when a sudden change is made to an examination format. Whereas the current literature presents the digital divide in terms of access to physical devices or connectivity, we explore its broader implications to include mental and usage access.

The primary research question was: What are student perceptions of writing online examinations as an emergency measure during the Covid-19 pandemic in an open distance learning (ODL) institution?

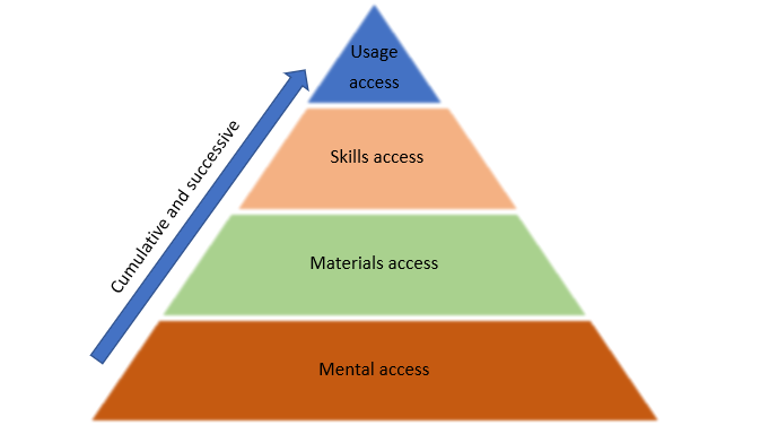

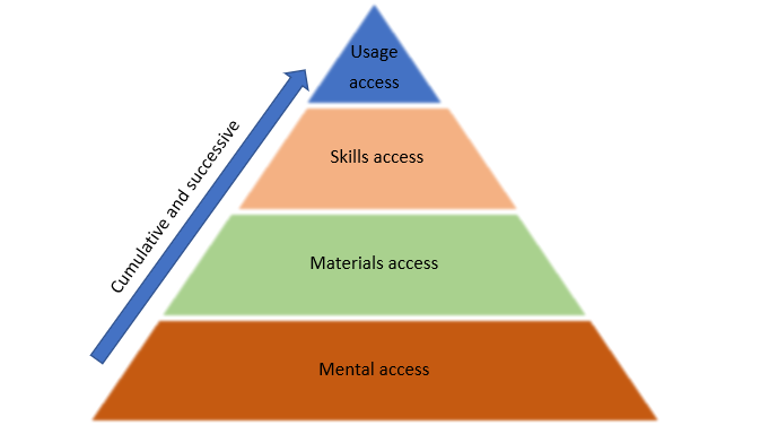

South Africa has been described as one of the most unequal countries in the world (Sulla et al., 2022). As such, the digital divide is a concept that is particularly relevant to this article. It denotes a split between groups of people who have access to technology and those who do not (van Dijk, 2002; 2017). Van Dijk, however, moves beyond this binary by identifying four stages of access, namely mental access, materials access, skills access, and usage access, all of which bear on this study. Mental access refers to computer anxiety. In this research, students used technology for the first time to write online examinations, which was likely to cause some anxiety. Materials access refers to the tools, data, and connectivity needed to participate in an online examination. Not all students in South Africa have access to digital devices for learning, such as desktop computers, tablets, and laptops. Skills access refers to the digital competencies needed to navigate various platforms and perform tasks such as downloading and uploading an online examination. Usage access refers to the opportunities students have previously had to use technology and may be particularly important in the context of this research. While students may use devices and the internet for other purposes (socialising, communicating, etc.), they had not used their devices or connectivity for writing online examinations.

Van Dijk (2002) further theorises that the four stages of digital access are successive and cumulative. Usage is the fourth of these stages and presupposes that mental, materials, and skills access are in place. However, students in this research study had to move to an online examination mode as a matter of emergency; therefore, the three earlier stages may not have been sufficiently developed to ensure a smooth transition to the fourth stage, that of usage access.

These four stages of technology access can be presented as follows (Figure 1):

Figure 1: Authors’ figure based on the four stages of technology access according to van Dijk (2002).

The digital divide has consequences graver than inconvenience: it perpetuates existing societal inequalities (Bozkurt & Sharma, 2020). Although the digital divide has long existed, the Covid-19 pandemic has entrenched it and may widen the gulf between those who have mental, materials, skills, and usage access issues and those who do not. Distance education has made access to higher education easier and more equitable (Houlden & Veletsianos, 2019). However, with distance education moving exclusively online during the pandemic, it may now be exclusionary when digital access is not the same for all students (Lembani et al., 2020).

Lembani et al. (2020) argue that the digital divide is not only about access but includes more complex issues, such as a lack of cognitive and social resources. Significantly, their study found that more than twice as many students living in urban areas as in rural areas had home access to computers and the internet. While their study identified different levels of access across, for example, the rural-urban divide, it could still consider public internet spaces and regional centres as access spaces for students. In the context of this research article, these spaces were unavailable to students due to lockdown measures.

It can be surmised that moving examinations from venue-based to entirely online would disadvantage students in lower socio-economic sectors of society, since access to digital devices (such as laptops) and internet connections, and the ability to afford data, are not equitably distributed among students. The digital divide is, therefore, a pertinent conceptual lens through which to explore students’ perceptions of online examinations in this context, and our research question is located within this framework.

In the next section, we review the literature on three aspects of online examinations: digital access, the duration of the examination, and the examination system interface.

Khare and Lam (2008, p. 391) suggested that online examinations "make sense for students who already have access to computers and network connections". Consistent with this, Butler-Henderson and Crawford’s (2020) systematic review of research, mainly in developed countries, found that only one study reported technological problems, in that case due to slow system response. The situation may be different in South Africa, where connectivity is inconsistent and among the most expensive in the world (Chinembiri, 2020). Most universities in South Africa decided to move examinations online due to the "extraordinary circumstances of 2020" (Reedy et al., 2021, p. 1); network connectivity, or the lack thereof, was not the determining factor.

Lack of access to necessary devices was one of the biggest challenges students experienced during the pandemic (Bashir et al., 2021). These authors also report that while students could access devices at the university library or in their departments before the pandemic, many had only smartphones and struggled to complete their online examinations on these devices. The study also reported that unstable internet connections and the cost of data added to the financial burden on students, who were mainly from middle- and lower-middle-income families (Bashir et al., 2021).

Digital access challenges related to data costs, devices and internet connectivity are significant in online examination participation and success. However, when students manage to access the online examination, other factors influence their success, such as the time they have to complete and submit their examination responses and the user-friendliness of the examination interface. We now turn to these.

The literature does not agree on the ideal time allocation for online examinations. Reedy et al. (2021) found that some students complained about a lack of time, while others felt they had too much time to complete online examinations. Genis-Gruber and Weisz (2022) reported online examination durations ranging from 4 to 24 hours, because contingency measures needed to be considered. At the institution involved in this research, traditional venue-based examinations ranged from 2 to 3 hours, while online examinations ranged from 4 to 72 hours.

Thomas and Cassady (2021) identified several challenges: insufficient time for the examination (including time lost to slow typing), inadequate orientation to the examination system, gaining access to the examination, uploading the examination, and periodically saving answers. A large proportion of students report needing more examination time (Khan & Khan, 2019; Ilgaz & Afacan Adanır et al., 2020), yet only a small proportion of faculty acknowledge time issues on behalf of students (Reedy et al., 2021). Faculty, in general, seem to pay close attention to the duration of online examinations, but only as a factor that can enable or minimise cheating behaviours (Ng, 2020; Reedy et al., 2021; Munoz & Mackay, 2019).

Students in Reedy et al.’s (2021) study who cited too much time indicated that the ample time confused them, because they assumed they should reference their work as they would in an assignment. The change from 'writing' to 'typing' answers may also contribute to a student's need for more time, especially if they are not skilled typists, as noted by Hillier (2014).

Munoz and Mackay (2019) found that insufficient time may leave students feeling helpless, which may lead to cheating. Khare and Lam (2008) suggest that online examinations take place over a few days rather than hours, since this would make it difficult to find an outsider to write the examination on a student's behalf. They add, however, that such online examinations should be made up of case studies and higher-order questions.

The duration of an online examination is a complex issue that follows a Goldilocks principle: either too much or too little time brings its own challenges. Irrespective of whether the time frame suits the examination, managing the submission process through the examination system interface is a further matter we raise here.

According to Butler-Henderson and Crawford (2020), the interface of the university examination system itself can either enable or hinder students when they write an online examination, particularly if the system is not intuitive. While their systematic review found that font sizes, colours, and timers had been studied in the literature, the ease of use of the examination system itself may need further investigation. Furthermore, systems may become more ‘intuitive’ the more students use them (i.e., usage access). Students’ ability to navigate the online examination site effectively speaks to its usability (Alshira’h et al., 2021), which relates to intangibles such as ease and effectiveness and, more specifically, to the system's simplicity, the speed of finding content, and the ease of navigation (Alshira’h et al., 2021). Muzaffar et al. (2021) identified the usability of the examination system as a leading attribute in their systematic review of online examination solutions, yet it was the least researched attribute in the preceding five years. These authors concluded that good usability means the system is easy and intuitive to use and should not require additional training.

For Alshira’h et al. (2021), e-learning platforms must be accessible and easy to use to succeed. Fawaz and Samaha (2020) found that the sudden move to online learning led to anxiety in many students and that students who were not accustomed to online learning experienced intense emotional discomfort. Navigating the online examination space may cause anxiety in students, especially when the interface is being used for the first time or is not easy to use. Anxiety, which corresponds to mental access, is the first consideration in the digital divide (van Dijk, 2002, 2017) and may exacerbate materials, skills, or usage access issues.

The research methodology was qualitative, even though the data came from a questionnaire. The last question in an online survey sent to students was open-ended, and the written responses to it comprised the data set for this study. Responses to open-ended questions typically require qualitative methods of coding and interpretation (Feng & Behar-Horenstein, 2019).

We surveyed Bachelor of Education (BEd) and Post Graduate Certificate in Education (PGCE) students. All students enrolled in the BEd and PGCE were invited to participate via a link sent to their university email; a total of 43,331 students received the link to the questionnaire. Students were given one month to respond, and a follow-up reminder was sent after two weeks. A total of 2,858 students responded to the quantitative part, and 1,899 responded to the open-ended question that formed the qualitative part, a response rate of 4.4% for the open-ended question. Survey response rates are, in general, notoriously low (Cohen et al., 2018).
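As a quick check of the figures reported above, the proportions can be recomputed from the stated counts (rounded to one decimal place). Only the 4.4% figure is reported in the text; the other two are derived here for illustration from the stated counts of 43,331 invited students, 2,858 quantitative respondents, 1,899 open-ended respondents, and the 336 responses that mentioned examinations.

$$
\begin{aligned}
\text{quantitative section: } & \frac{2\,858}{43\,331} \approx 6.6\% \\
\text{open-ended question: } & \frac{1\,899}{43\,331} \approx 4.4\% \\
\text{open-ended responses mentioning examinations: } & \frac{336}{1\,899} \approx 17.7\%
\end{aligned}
$$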

This research used an open-ended question that formed part of a more extensive study on students’ perceptions of moving teaching, learning and assessment entirely online during Covid-19. The complete questionnaire comprised two parts: a quantitative section with Likert-type questions and a concluding open-ended question. Given the size of the data set, we focused only on the qualitative responses to the open-ended question. In terms of content validity, the responses analysed are relevant to and representative of the construct of interest, i.e., online examinations, since the respondents themselves chose to focus their answers on aspects of online examinations. We clarified the concept “online examination” by reviewing the literature. We also evaluated the responses both individually and collectively to ensure that they were relevant to the construct.

The open-ended question, “From your experience, please share any recommendations for learning during future disruptions”, was not a compulsory part of the questionnaire. We were looking for responses from students who wanted to share their experiences, and making the question optional was intended to avoid forced or superficial answers. The responses were screened for the word “exam”, a filter that captured any response using “exam,” “exams,” “examination,” or “examinations.” This filter yielded 336 responses.
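The source does not state which tool was used for this screening. As a minimal sketch, assuming the responses were available as plain text and a case-insensitive pattern match was acceptable, the filter might look like the following. The function name `screen_responses`, the pattern, and the sample responses are hypothetical illustrations, not the authors' procedure or data. The pattern targets only the four word forms listed above; a bare substring search for "exam" would also pick up words such as "example" or "examine".

```python
import re

# Hypothetical pattern: "exam", "exams", "examination" or "examinations"
# as whole words, ignoring case. The word boundaries (\b) stop it from
# matching inside longer words such as "example" or "examine".
EXAM_PATTERN = re.compile(r"\bexam(?:s|inations?)?\b", re.IGNORECASE)


def screen_responses(responses):
    """Return only the responses that mention an examination term (hypothetical helper)."""
    return [text for text in responses if EXAM_PATTERN.search(text)]


if __name__ == "__main__":
    # Invented sample responses for illustration; not data from the study.
    sample = [
        "The exam time was too short.",
        "Provide data during EXAMINATIONS please.",
        "A good example of support was the online classes.",
        "Exams should be written in a hall.",
    ]
    for text in screen_responses(sample):
        print(text)
```

Whatever tool is used, a keyword hit does not guarantee that a response is about online examinations, so matched responses would still need the individual relevance check that the authors describe.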

The three authors analysed the responses that contained the word “exam”. We first coded individually and then coded together to ensure the consistency of the coded data. We used colour coding to annotate the data and then created categories and themes. In addition, we allowed for open coding to capture any data that represented new themes.

Ethical clearance and permission to conduct the research were granted by the ethics committee of the participating institution (Ref: 2020/08/12/90159772/19/AM). The survey was entirely anonymous: no student names or e-mail addresses were collected.

The study has the following limitations. First, the student response rate to the survey was low. Second, only students registered for two qualifications in Education were sampled.

The six-phase thematic analysis of Braun and Clarke (2006) was used to analyse the data. These authors define thematic analysis as a flexible method for systematically identifying and organising data into patterns of meaning (Braun & Clarke, 2012). The six phases we followed were: 1) to familiarise ourselves with the data, 2) to generate initial codes, 3) to search for themes, 4) to review them, 5) to define and name them, and 6) to write the report. In our case, we worked deductively by selecting and analysing responses that contained the word “exam”. Braun and Clarke (2012) state that, in a deductive approach, researchers bring to the data certain concepts, ideas, or topics that they use for coding and interpreting the data. Of the 1,899 students who answered the open-ended question, 336 chose to raise an issue related to online examinations, even though the question did not ask specifically about examinations.

Three themes predominated in the data we analysed. Students frequently mentioned that they ran out of time when writing the examination; that they were not equipped with suitable devices, data, and connectivity, which we called “digital access”; and that they struggled with the examination system itself, which we called the “usability of the examination system interface.” We now present the main findings.

A total of 69 out of the 336 responses that included the word “exam” related to time or duration of the online examination.

This theme revealed that several students considered the duration of the examination insufficient for completing it. Students attributed the shortage of time to challenges in navigating the examination system and to network problems while writing, which sometimes led to non-submission of their examination papers. Their comments also reflected other technical challenges, such as using equation editors. The comments do not reflect a problem in answering the actual questions about the module content in the given time. The respondents suggested that:

The time given to write the exam was not enough since some of us were not good at using computers. We struggle using Microsoft office, and writing exam equations become more time-consuming.

We need more time when writing our exams...because we experience more problems

Many students were accustomed to writing exams only at a physical venue and were not used to writing online examinations. Some students mentioned that typing (instead of writing by hand) also increased the time needed for the examination because they typed more slowly than they wrote (similar to Hillier, 2014):

The university must increase the time for online examinations, typing, and submitting scripts because this is a lot of work.

One student indicated that the written questions were more time-consuming than the multiple-choice questions:

[we needed] enough time during exams, especially on written exams with no multiple-choice questions.

While multiple-choice examinations make marking more efficient, and randomised question banks can help mitigate cheating, a written response is sometimes better suited to the content of the course. This may be the case in many education courses, where students must bridge the divide between theory and practice.

Similarly, most students referred to needing more time to upload their exams because of network delays and what they considered to be problems with the submission system:

I want to ask the university to look into the time of online exams as it is little and find other options to submit online examinations. At times, the system does not allow us to submit exams without any form of difficulty, and that caused us to submit late or not be able to submit at all.

The time constraints experienced by students seemed to stem from limited typing skills as well as network and system problems. Institutional readiness is essential to ensure that the time allocated to an online examination takes these aspects into account. Since this might be an ongoing process, institutional readiness should include policies, resources, and practices (Rahim, 2020) to create consistency in examination duration across all faculties and subjects, while also accommodating external contingencies (Khan et al., 2021; Reedy et al., 2021; UNESCO, 2020). Time allocation for online examinations should consider the time needed for typing, connectivity, digital competence in uploading a document, and problems with the system itself. Considerations other than preventing cheating should therefore inform the duration of an online examination. Students may feel added anxiety (mental access) when writing online examinations if time constraints affect their ability to answer questions and submit their answers.

A total of 71 responses related to digital access during the examinations. This included comments about devices, data, or network connections.

Unreliable internet connectivity contributed to the time challenges. When the internet connection was interrupted, students had difficulty completing their exams on time. Some students lost connectivity and had to log into their examinations again, while others were hampered by slow internet connections. The challenge of maintaining a stable internet connection during online examinations is not unique to South Africa; it has been reported, for example, by Radu et al. (2020) in Romania and by Shraim (2019) in Australia.

As an example of internet challenges, a student said:

... at times, the internet was very slow when I was trying to download or upload my examination script.

When the internet was too slow, the connection was interrupted and students could not proceed, confirming the finding of Radu et al. (2020) that students cited internet connectivity as the most problematic area in completing their online examinations.

Students' responses also suggested that internet interruptions were not one-off occurrences. A student stated:

I experienced problems with multiple-choice question exams. When the internet connection was interrupted, as it often does, we had to log back into the exam and start from the beginning. Some students did not have enough time to restart from the beginning.

When students experienced challenges with an unstable internet connection, it was not always clear where the problem lay. The university system may have been slowed by the high number of students writing online examinations in the same time slot, overloading the system, or the student's own internet connection may have been slow. In this regard, a student made the following request to the university:

Ensure that students [are] not struggling to connect to the institutional internet connection while we are writing exams.

Power outages contributed to the general problem of internet connection. Below are two examples of students referring to issues related to electricity and connectivity:

What if there is load shedding [the practice in South Africa of rotational electricity supply when demand exceeds capacity] during the writing of an online examination?

It is difficult because sometimes we have electricity and signal problems during the examination.

Related to connectivity was access to data and tools, which van Dijk (2002) refers to as materials access. Some students indicated that they did not have the necessary tools and data to write online examinations and asked the university to provide them. For example, a student said:

Provide all students with laptops and data bundles during examinations, not only the bursary students, it is unfair towards the rest of us [who do not receive laptops and data].

Although all students received data bundles from the university during examination periods, only students who had bursaries from the national bursary fund received laptops (van den Berg, 2021). Before the pandemic, students did not depend on laptops for their examinations, since these were venue-based. Therefore, students who did not own laptops and the necessary data bundles had serious challenges writing online examinations. Comparable results on the lack of the needed devices, internet access, and data costs were reported by Bashir et al. (2021) in Bangladesh, who noted that, before the pandemic, students went to venues at the university to access devices.

In this regard, a student in this research noted:

Please ensure that all learners have the right equipment to make online learning more accessible and successful. Provide students with monthly data, not only during exams.

Another added:

The university must stop online examinations because some students do not have smartphones or laptops, while some cannot finish writing their exams due to network problems.

The comment above is in line with the argument of Khare and Lam (2008) that online examinations only work for students who have access to computers and network connections. Radu et al. (2020) reiterated that students who lack suitable tools and access to stable connectivity would face the most challenges in fully online learning. In addition, existing injustices are perpetuated when students do not have functional tools, data, or digital skills (Govindarajan & Srivastava, 2020). Iivari et al. (2020) note that various digital divides exist (e.g., in resources, skills, and access) and were highlighted by the pandemic.

A total of 97 comments were about challenges related to the online examination system.

It is important to note that the issues highlighted in this article were interrelated. Unstable internet connectivity affects students' engagement with and perception of the examination system interface. When students experience challenges downloading or uploading their examination scripts or answering multiple-choice questions online, the cause may be their device, the internet connection, or the interface itself. Although system access and connectivity are distinct issues, they become entangled when students report their challenges with online examinations. What students report as a system problem could instead be due to operating systems or personal or workplace firewalls (Butler-Henderson & Crawford, 2020). Internet connectivity also affects how systems respond. For Khan and Khan (2019), the way the change from traditional to online examinations is facilitated is one of the most critical elements in students' acceptance of online assessment.

We received the following statement referring to the interface itself:

Try to make the system more straightforward during the examination and minimise having different options for writing online exams per module.

Some courses used multiple-choice examinations, while others required written responses to the examinations. This meant that students might have had to learn how to navigate both of these platforms. To help students locate the examination papers, they were published on two systems, and extra links were provided for uploading purposes; however, this may have confused the students further, as indicated in this example:

I want to ask the university exam team not to change how we download and upload documents.

Students felt that an orientation session would make them more comfortable with online examinations. This suggests that students understood that greater usage access would ease the transition to online examinations. Ideally, a slower transition would have helped students become familiar with the interface, but this was not possible in the context of pandemic-driven educational decisions:

I recommend that students be orientated with online examinations. Also, the system is so complicated as it took me four whole months trying to learn and understand it.

For some students, accessing or navigating the interface proved too much, and they would prefer to write traditional examinations, in what can be seen as “digital exclusion” (Coleman, 2021, p. 3):

I would like an opportunity to write my exams in a hall because it is difficult for some of us to write exams online. Last year I failed twice to submit my exams.

What is evident is that “the problems of digital access for all has still not been solved, and this was worsened by the pandemic crisis” (Alvarez Jr, 2021, p. 26).

A further complication was the invigilation systems used by the institution to uphold the academic integrity of online examinations, which many students found difficult:

The university must come up with another invigilation tool for the exams because Iris is complicated, and I was unable to use it.

The invigilation system meant that students required two devices to write their online examinations and had to navigate both systems to ensure a successful examination sitting. The university did offer online workshops to introduce students to the new examination system and proctoring tools.

Not all students attended these workshops; however, students who did attend reported positive outcomes from the sessions:

The online classes really helped a lot with examination preparation.

However, data-conscious students may have chosen not to attend these sessions to ensure they had data for their examinations. Although the examinations used the same learning management system as the teaching space, the university rolled out orientation sessions to assist students with what van Dijk (2002; 2017) termed “mental access”, i.e. anxiety. While students welcomed the examination preparation sessions, the need for them implies either that the system requires added training, in which case it may not be user-friendly, or that students were not engaging with the LMS for learning purposes and were therefore not comfortable navigating the system. This points to usage access as a barrier to successful examinations. It is, therefore, possible that usage access affects mental access and skills access. If that is the case, then van Dijk’s proposition that the four access points are successive (moving from mental access to materials access to skills access and then, finally, to usage access) and cumulative may need refinement. We may need to consider the interdependence of the four access points.

The purpose of this research was to explore student perceptions of writing online examinations for the first time, as an emergency measure brought about by the Covid-19 pandemic. According to Broom (2020), 46% of the global population, mainly in low-income countries, lives entirely offline. Therefore, sweeping decisions to move all examinations online can be justified only by serious safety concerns. Digital exclusion (Coleman, 2021) in all its forms was exacerbated by the pandemic (Alvarez Jr, 2021). However, examinations had to continue so that students could progress with their studies.

Our findings highlighted three areas of concern for students: insufficient time, digital access, and system interface problems. Since many students did not have enough time to complete their online examinations, more research is needed to determine appropriate time allocations for online examinations; the traditional allocations for sit-down examinations need to be revised when setting online examinations. In terms of digital access, students need stable internet connections, since connectivity also affects the time needed to complete an examination. In addition, students require tools and data to succeed in online examinations. As for examination system interfaces, students need to be able to navigate the interface intuitively, drawing on their previous experience of learning on the LMS. System interfaces that require extensive training may not be suitable, given the anxiety inherent in examinations.

Our suggestions to leaders in higher education would be the following:

The themes of digital connectivity, examination duration and system interface recurred throughout the dataset. In exploring the digital divide in the dataset, we found numerous examples of issues with mental access (anxiety), materials access (devices, data, and connectivity), skills access (competency in using technology) and usage access (students not accustomed to using technology for online examinations). The three themes we discussed provide important insights into usage access. We also take up the issue of social access noted by Lembani et al. (2020), which played a role for the students in our research. Students could not rely on their peers for assistance, since all students were writing online examinations for the first time and none could draw on prior knowledge or experience of them. We, therefore, propose that usage access in the digital divide be broadened to include a communal, social usage aspect.

The findings also suggest that digital divide frameworks may need to include other access avenues, such as ‘support access’: peer learning, tele-support centres, Twitter hashtags or videos that students could watch to become more familiar with online examinations. Support access would also require universities to consider offering ‘practice’ online examinations so that students feel comfortable with the examination system interface. Since the pandemic brought about the move to fully online examinations in many contexts, this field is still in its infancy and requires further research. Finally, usage access may foster skills access; we therefore recommend further theoretical and empirical work on the digital divide, especially on its proposed successive and cumulative nature.

The study is limited in that data were collected from only one college at one university. Since each college and each institution is unique, the results may differ at other colleges and institutions. Using a digital survey is a further limitation, since many students affected by the digital divide may not have had the tools or data to participate in the study. Nevertheless, the number of responses and the themes generated shed light on student experiences of online examinations during Covid-19.

Considering these limitations, we recommend further studies on how student experiences can inform and enhance the use of online examinations. We also recommend quantitative or mixed-methods studies that focus more specifically on online examinations, since these are likely to remain part of the distance learning landscape.

Afacan Adanır, G., İsmailova, R., Omuraliev, A., & Muhametjanova, G. (2020). Learners’ perceptions of online exams: A comparative study in Turkey and Kyrgyzstan. The International Review of Research in Open and Distributed Learning, 21(3), 1-17. https://doi.org/10.19173/irrodl.v21i3.4679

Alshira’h, M., Al-Omari, M., & Igried, B. (2020). Usability evaluation of learning management systems (LMS) based on user experience. Turkish Journal of Computer and Mathematics Education, 12(11), 6431-6441. https://www.turcomat.org/index.php/turkbilmat/article/view/7031/5737

Alvarez Jr, A.V. (2021). Rethinking the digital divide in the time of crisis. Globus Journal of Progressive Education, 11(1), 26-28. https://doi.org/10.46360/globus.edu.220211006

Bashir, A., Uddin, M.E., Basu, B.L., Khan, R., Hardiyanti, D., Nugraheni, Y., Nababan, M., Santosa, R., Gozali, I., Lie, A., & Tamah, S.M. (2021). Transitioning to online education in English Departments in Bangladesh: Learner perspectives. Indonesian Journal of Applied Linguistics, 11(1), 11-20. https://doi.org/10.17509/ijal.v11i1.34614

Bishnoi, M.M., & Suraj, S. (2020). Challenges and implications of technological transitions: The case of online examinations in India. Paper presented at the 15th (IEEE) International Conference on Industrial and Information Systems (ICIIS), 540-545. https://doi.org/10.1109/ICIIS51140.2020.9342655

Bozkurt, A., & Sharma, R.C. (2020). Emergency remote teaching in a time of global crisis due to coronavirus pandemic. Asian Journal of Distance Education, 15, i-vi. https://doi.org/10.5281/zenodo.3778083

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3, 77–101. https://doi.org/10.1191/1478088706qp063oa

Braun, V., & Clarke, V. (2012). Thematic analysis. American Psychological Association. https://doi.org/10.1037/13620-004

Broom, D. (2020, April 22). Coronavirus has exposed the digital divide like never before. https://www.weforum.org/agenda/2020/04/coronavirus-covid-19-pandemic-digital-divide-internet-data-broadband-mobbile/

Butler-Henderson, K., & Crawford, J. (2020). A systematic review of online examinations: A pedagogical innovation for scalable authentication and integrity. Computers & Education, 159, 104024. https://doi.org/10.1016/j.compedu.2020.104024

Chinembiri, T. (2020). Despite reduction in mobile data tariffs, data still expensive in South Africa. AfricaPortal (africaportal.org).

Cohen, L., Manion, L., & Morrison, K. (2018). Research methods in education (8th ed.). Routledge.

Coleman, V. (2021). Digital divide in UK education during COVID-19 pandemic: Literature review. Research report. Cambridge Assessment. https://files.eric.ed.gov/fulltext/ED616296.pdf

Fawaz, M., & Samaha, A. (2020). The psychosocial effects of being quarantined following exposure to COVID-19: A qualitative study of Lebanese health care workers. International Journal of Social Psychiatry, 66(6), 560-565. https://doi.org/10.1177/0020764020932202

Feng, X., & Behar-Horenstein, L. (2019). Maximising NVivo utilities to analyse open-ended responses. The Qualitative Report, 24(3), 563-571. https://doi.org/10.46743/2160-3715/2019.3692

Genis-Gruber, A., & Weisz, G. (2022). Challenges of online exam systems in the COVID-19 pandemic era: E-Assessment of perception, motivation, and performance. In Measurement methodologies to assess the effectiveness of global online learning (pp. 108-136). https://doi.org/10.4018/978-1-7998-8661-7.ch005

Govindarajan, V., & Srivastava, A. (2020). What the shift to virtual learning could mean for the future of higher ed. Harvard Business Review, 31(1), 3-8. https://hbr.org/2020/03/what-the-shift-to-virtual-learning-couldmean-for-the-future-of-higher-ed

Hillier, M. (2014). The very idea of e-Exams: Student (pre) conceptions. In B. Hegarty, J. McDonald, & S.-K. Lok (Eds.), Rhetoric and reality: Critical perspectives on educational technology. Proceedings of the Australasian Society for Computers in Learning in Tertiary Education (Ascilite) (pp. 77-88). https://ascilite.org/conferences/dunedin2014/files/fullpapers/91-Hillier.pdf

Houlden, S., & Veletsianos, G. (2019). A posthumanist critique of flexible online learning and its “anytime anyplace” claims. British Journal of Educational Technology, 50(3), 1005-1018. https://doi.org/10.1111/bjet.12779

Iivari, N., Sharma, S., & Ventä-Olkkonen, L. (2020). Digital transformation of everyday life – How COVID-19 pandemic transformed the basic education of the young generation and why information management research should care? International Journal of Information Management, 55, 102183. https://doi.org/10.1016/j.ijinfomgt.2020.102183

Ilgaz, H., & Afacan Adanır, G. (2020). Providing online exams for online learners: Does it really matter for them? Education and Information Technologies, 25(2), 1255-1269. https://doi.org/10.1007/s10639-019-10020-6

Khan, S., & Khan, R.A. (2019). Online assessments: Exploring perspectives of university students. Education and Information Technologies, 24, 661–677. https://doi.org/10.1007/s10639-018-9797-0

Khan, M.A., Vivek, V., Khojah, M., Nabi, M.K., Paul, M., & Minhaj, S.M. (2021). Learners’ perspective towards e-exams during COVID-19 outbreak: Evidence from higher educational institutions of India and Saudi Arabia. International Journal of Environmental Research and Public Health, 18(12), 6534, 1-18. https://doi.org/10.3390/ijerph18126534

Khare, A., & Lam, H. (2008). Assessing student achievement and progress with online examinations: Some pedagogical and technical issues. International Journal on E-learning, 7(3), 383-402. https://www.learntechlib.org/primary/p/23620/

Lembani, R., Gunter, A., Breines, M., & Dalu, M. T. B. (2020). The same course, different access: The digital divide between urban and rural distance education students in South Africa. Journal of Geography in Higher Education, 44(1), 70-84. https://doi.org/10.1080/03098265.2019.1694876

Munoz, A., & Mackay, J. (2019). An online testing design choice typology towards cheating threat minimisation. Journal of University Teaching and Learning Practice, 16(3). https://doi.org/10.53761/1.16.3.5

Muzaffar, A.W., Tahir, M., Anwar, M.W., Chaudry, Q., Mir, S.R., & Rasheed, Y. (2021). A systematic review of online exams solutions in e-learning: Techniques, tools, and global adoption. IEEE Access, 9, 32689-32712. https://doi.org/10.1109/ACCESS.2021.3060192

Ng, C.K.C. (2020). Evaluation of academic integrity of online open book assessments implemented in an undergraduate medical radiation science course during COVID-19 pandemic. Journal of Medical Imaging and Radiation Sciences, 51(4), 610-616. https://doi.org/10.1016/j.jmir.2020.09.009

Ngqondi, T., Maoneke, P., & Mauwa, H. (2021). A secure online exams conceptual framework for South African universities. Social Sciences & Humanities Open, 3, 100132. https://doi.org/10.1016/j.ssaho.2021.100132

Radu, M.C., Schnakovszky, C., Herghelegiu, E., Ciubotariu, V.A., & Cristea, I. (2020). The impact of the COVID-19 pandemic on the quality of educational process: A student survey. International Journal of Environmental Research and Public Health, 17(21), 7770. https://doi.org/10.3390/ijerph17217770

Rahim, A.F.A. (2020). Guidelines for online assessment in emergency remote teaching during the COVID-19 pandemic. Education in Medical Journal, 12(2), 59-68. https://doi.org/10.21315/eimj2020.12.2.6

Reedy, A., Pfitzner, D., Rook, L., & Ellis, L. (2021). Responding to the COVID-19 emergency: Student and academic staff perceptions of academic integrity in the transition to online exams in three Australian universities. International Journal for Educational Integrity, 17(9), 2-32. https://doi.org/10.1007/s40979-021-00075-9

Shraim, K. (2019). Online examination practices in higher education institutions: Learners’ perspectives. Turkish Online Journal of Distance Education, 20(4), 185-196. https://doi.org/10.17718/tojde.640588

Sulla, V., Zikhali, P., & Cuevas, P.F. (2022). Inequality in Southern Africa: An assessment of the Southern African Customs Union (English). World Bank Group. http://documents.worldbank.org/curated/en/099125303072236903/P1649270c02a1f06b0a3ae02e57eadd7a82

Thomas, C.L., & Cassady, J.C. (2021). Validation of the state version of the state-trait anxiety inventory in a university sample. SAGE Open, 11(3). https://doi.org/10.1177/21582440211031900

UNESCO. (2020). Exams and assessments in COVID-19 crisis: Fairness at the center. https://en.unesco.org/news/exams-and-assessments-covid-19-crisis-fairness-centre

van den Berg, G. (2021). The role of open distance learning in addressing social justice: A South African case study. In Social Justice and Education in the 21st Century (pp. 331-345). Springer, Cham. https://doi.org/10.1007/978-3-030-65417-7_17

van Dijk, J.A. (2002). A framework for digital divide research. The Electronic Journal of Communication, 12(1&2), 1-7. (utwente.nl)

van Dijk, J.A. (2017). Digital divide: Impact of access. The International Encyclopedia of Media Effects, 1-11. https://doi.org/10.1002/9781118783764.wbieme0043

Authors:

Piera Biccard holds a PhD in curriculum studies and is a lecturer in the Department of Curriculum and Instructional Studies, UNISA. She lectures honours students and publishes in the field of mathematics education and the development of mathematics teachers. She holds an NRF rating in this field. She has also authored publications in the field of Open Distance Education, a subject that has recently captured her interest and expertise. Email: biccap@unisa.ac.za

Patience Kelebogile Mudau, Ph.D., is an Associate Professor in the Department of Curriculum and Instructional Studies, College of Education, UNISA. She currently teaches two modules of the MEd in Open Distance Learning (ODL): Curriculum Development in ODL and Management in ODL. She is involved in the coordination of the Online Teaching and Learning Certificate of Advanced Studies (CAS), emanating from the Memorandum of Agreement between UNISA and Oldenburg universities. Her research interests are Open Distance e-Learning, Open Education Practices and Technology-Enhanced Learning. Email: mudaupk@unisa.ac.za

Geesje van den Berg is a full Professor in the Department of Curriculum and Instructional Studies, UNISA and a Commonwealth of Learning Chair in open distance learning (ODL) for Teacher Education. Her research focuses on student interaction, academic capacity building, openness in education, and teachers’ use of technology in ODL. She has published widely as a sole author and co-author with colleagues and students in curriculum studies and ODL. She leads a collaborative academic capacity-building project for UNISA academics in ODL between Carl von Ossietzky University of Oldenburg in Germany and UNISA. She is the programme manager of the structured Master’s in Education (ODL) programme and teaches two modules. Numerous master's and doctoral students have completed their studies under her supervision. Email: vdberg@unisa.ac.za

Cite this paper as: Biccard, P., Mudau, P.K., & van den Berg, G. (2023). Student perceptions of online examinations as an emergency measure during Covid-19. Journal of Learning for Development, 10(2), 222-235.