2020 VOL. 7, No. 1

Abstract: The EU-funded TeSLA project (Adaptive Trust-based e-Assessment System for Learning, http://tesla-project.eu) has developed a suite of instruments for e-authentication. These include face recognition, voice recognition, keystroke dynamics, forensic analysis and plagiarism detection, which were designed for integration within a university's virtual learning environment. These tools were trialed across the seven partner institutions with 4,058 participating students, including 330 students with special educational needs and disabilities (SEND), and 54 teaching staff.

This paper describes the findings of this large-scale study, in which over 50% of the students gave a positive response to the use of these tools. In addition, over 70% agreed that the key purposes of these tools were "to ensure that my examination results are trusted" and "to prove that my essay is my own original work". Teaching staff also reported positive experiences with TeSLA, with the figure reaching 100% in one institution. We show that there is evidence that a suite of e-authentication tools such as TeSLA can potentially be acceptable to students and staff and can be used to increase trust in online assessment. There is also evidence that, while not yet perfected for SEND students, it can still enrich their experience of assessment. We find that care is needed when introducing such technologies in order to build the layers of trust required for their successful adoption.

Keywords: assessment, cheating, plagiarism, e-authentication.

Many traditional face-to-face universities are beginning to provide online learning options for some of their teaching. This raises academic integrity issues that need to be addressed when embarking upon online assessment. These issues focus primarily on student identity but also include plagiarism. They have, of course, existed for as long as assessment itself, as there are evolutionary drivers for these behaviours (Wade & Breden, 1980). These intertwined issues of identity and cheating (attempting to pass off someone else's work as one's own) can be described as problems of authenticating individual students.

Solutions to authentication issues of this nature include implementing high levels of security to ensure that the correct person sits an assessment and does not cheat during it. Conventionally, this is achieved through identity checks by invigilators, and the assessment is duly proctored to ensure that no cheating takes place. The high level of resources required for this type of formal assessment means that it is only suitable for infrequent, high-stakes assessment. It is, therefore, also important to persuade students not to attempt to cheat by promoting good academic practice and, in this way, academic integrity.

McCabe, Trevino and Butterfield (2001) provide a list of 10 principles of academic integrity for faculty. They are quoted here for convenience:

Interestingly, their list from the student perspective includes the provision of deterrents, with "harsh penalties" given in the examples. In most institutions the penalties will likely be determined at the institutional rather than the faculty level. While this list was written as a reflection on the previous decade, before the boom in online learning, each of the items resonates in today's higher education environment. A more recent study, written firmly in the contemporary technological context, does not attempt to modify this list of principles: Van Veen and Sattler (2018) do, however, aim to deepen our understanding of the role of deterrence while suggesting that other factors also fit within the local context. The European Commission funded the Innovation Alliance (http://www.innovationalliance.eu), which promotes academic integrity as having five interconnected key values: faith (or trust), fairness, respect, honesty and a sense of responsibility. These also chime with McCabe et al.'s 10 principles.

The rapid increase in both online tuition and the opportunities for online assessment requires little significant rethinking of most of the 10 principles above, other than extending what we consider to be the classroom and the campus (Principles 4 and 10). Trust, as mentioned in Principle 4, requires us to rethink the notion of a classroom, which has changed radically from a physical space hosting real-time interaction between teachers and students to a virtual space, mediated through the Internet, where asynchronous interactions take place without geographical coincidence. Student expectations, as described in Principle 6, will also have changed. Furthermore, online assessment provides a range of opportunities to engage in dishonesty (Principle 8). Dishonesty in the context of e-assessment is commonly known as cheating, which Bartley (2005, p. 27) defines as "all deceptive or unauthorised actions". However, through Principle 7, there are new opportunities to develop 'fair and relevant' assessments. The following section considers two different approaches to online assessment that address these challenges and opportunities.

There are now well-developed online proctoring solutions that enable institutions to manage full examinations while their students are geographically dispersed and taking e-assessments. For some universities this could be the primary form of assessment. For example, Western Governors University (WGU) in Utah, USA, states that, "Thanks to webcam technology and online proctoring services, WGU enables you to take tests from the comfort, privacy, and convenience of your home or office ..." using remotely proctored assessments (WGU, 2020). This is seen as an alternative mode of delivery for a traditional examination model of assessment, and expectations are set accordingly. The notion of the exam hall is extended to include every space in which a student is being examined. The opportunities to cheat are reduced through a combination of recordings, which may include the use of multiple cameras, microphones and screen recorders. The ratio of students to proctors is set to satisfy an institution's appetite for risk and will be a factor contributing to the overall trust in the system.

A different approach is required for lower-stakes e-assessments, which are more frequent but can still contribute to a student's overall mark and grade. Both kinds of assessment are supported by TeSLA (an Adaptive Trust-based e-Assessment System for Learning), a suite of tools for trust-based authentication and authorship analysis. This paper reports on the findings from the large-scale testing of this suite of e-authentication tools, which was developed as part of a European Horizon 2020 project. The project created a system to support authentication and authorship checking for e-assessments.

The European Commission funded a three-year project that was successfully completed at the end of May 2019. It brought together eighteen partner organisations, including seven universities, teaching in seven different languages, that trialed the tools. The goal was to develop and pilot an online suite of tools that would become a commercially viable solution for institutions to improve trust in online assessment through the e-authentication of students' work.

The project developed several e-authentication instruments, each of which could be implemented in a Learning Management System (e.g., Moodle) as part of higher education’s online provision. In other words, the TeSLA system is modular, allowing individual higher education institutions to implement their choice of TeSLA instruments according to their local needs.

The TeSLA instruments can be categorised as either biometric or textual analysis tools:

Biometric Tools: Face Recognition; Voice Recognition; Keystroke Dynamics

Textual Analysis Tools: Forensic Analysis; Anti-Plagiarism

The software development went through several iterations, which were evaluated in three piloting phases (Pilot 1, Pilot 2, Pilot 3) undertaken in each of the seven universities (including face-to-face, blended and online learning environments). Studying the implementation of TeSLA in universities across six countries, each with its own complex context, also enabled the project to help those universities address the changes in personal data legislation (GDPR) which came into effect after Pilot 1 (in May 2016). Through this iterative process, in addition to changes that were implemented in both the software and the testing protocols, there were several pedagogical developments, including a typology of supported assessments.

In summary, the pilots were designed to:

The pilots also provided snapshots of the potential uses of the TeSLA software. Pilot universities also investigated how TeSLA could enhance users' trust in an online e-authentication system by monitoring student identities during e-assessments. However, it is important to note that in the earlier pilots (Pilots 1 and 2) the software was, by design, still in development. Accordingly, in those pilots the range of assessments was restricted and only a limited amount of data was available. In Pilots 3a and 3b, by contrast, student numbers increased and more data became available. While a technical team continued to develop the suite, three pilot studies of increasing scope informed this development and tested the tools in a range of situations, from in-class assessment sessions at campus-based universities to TeSLA-enabled assessments in real distance learning courses at online institutions. This paper describes the methods used to evaluate the pilot studies and discusses the findings.

The seven universities that trialed the TeSLA suite were:

Distance Learning Institutions: The Open University of Catalunya (UOC); Open University, Netherlands (OUNL); Open University, United Kingdom (OUUK)

Blended Learning Institutions: Anadolu University (AU), University of Jyväskylä (JYU)

Face-to-Face Universities: Sofia University (SU); Technical University of Sofia (TUS)

The overarching research questions for the three studies were:

The three pilot studies ran consecutively, one in each year of the project.

Pilot 1, Year 1, aims: to test the pilot communication protocol and the technical implementation protocol. The target number of student participants was 600. Data were collected through interviews with participants and used to improve the two protocols.

Pilot 2, Year 2, aim: to test each TeSLA instrument in isolation within assessment activities.

The target number of student participants was 3,500. Partners developed four questionnaires: one pair for student participants and the other for staff participants. Each participant completed one questionnaire before they engaged with TeSLA and the second after they had engaged. These provided pre- and post-intervention responses and included some free-text responses. The data provided feedback for the technical team and the pilot university teams, and helped improve the questionnaires for Pilot 3.

Pilot 3, Year 3, aim: Test the full integration of the TeSLA system and its scalability. Target number of student participants: Phase 1 – 7,000, Phase 2 – 10,000.

The first two pilots were in effect intermediate development steps for the final Pilot 3.

The numbers of participants in the pilot studies, set out in Table 1, exceeded the targets for each pilot. The table also gives the numbers of participants with special educational needs and disabilities (SEND).

Table 1: The number of student participants, teachers and courses for each of the three pilots, including the number of SEND students

| Pilot | Students | SEND students | Teachers | Courses |
|---|---|---|---|---|
| 1 | 637 | 24 | 22 | 24 |
| 2 | 4,931 | 287 | 43 | 125 |
| 3 | 17,373 | 550 | 392 | 310 |

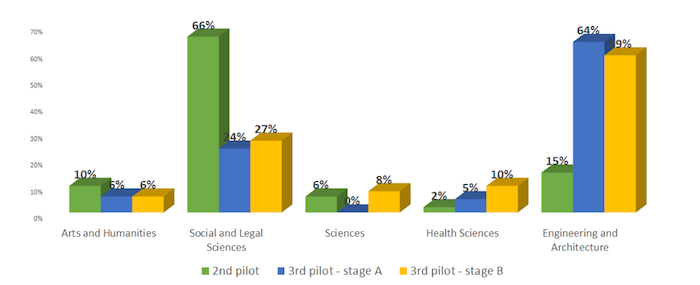

Figure 1 shows the spread of participants across subject areas for each pilot study. While most Pilot 3 participants were studying engineering and architecture, good numbers were drawn from each of the other subject areas.

Figure 1: The spread of student participants across subjects, by pilot (Pilots 2 and 3).

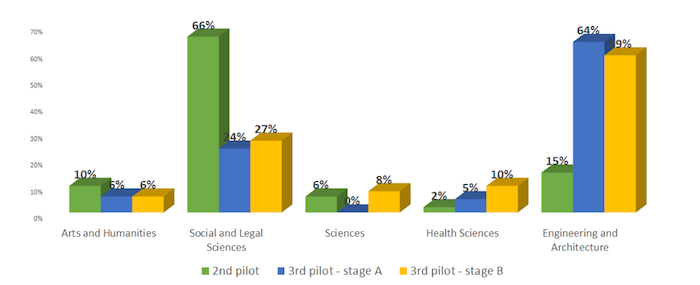

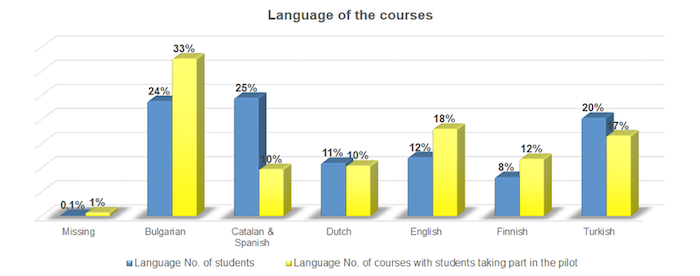

Figure 2 shows the spread of Pilot 3 participants across the seven languages of the project, illustrating a fair representation of each language across the whole sample.

Figure 2: The spread of student participants across the seven languages in Pilot 3.

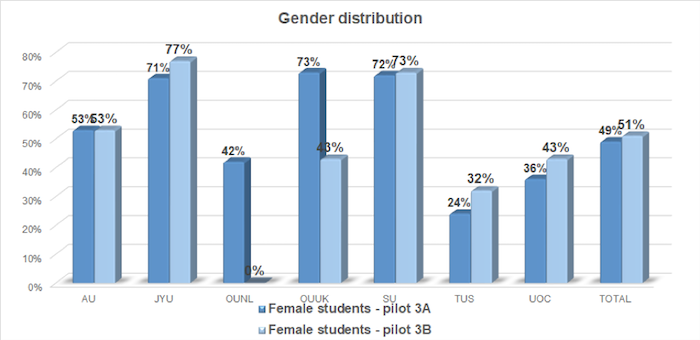

Figure 3 shows the gender distribution as the percentage of female students by institution for each of the two phases of Pilot 3. While there is variation between institutions, overall there is a balance between female and male students.

Figure 3: The percentage of female participants for Pilots 3a and 3b by institution.

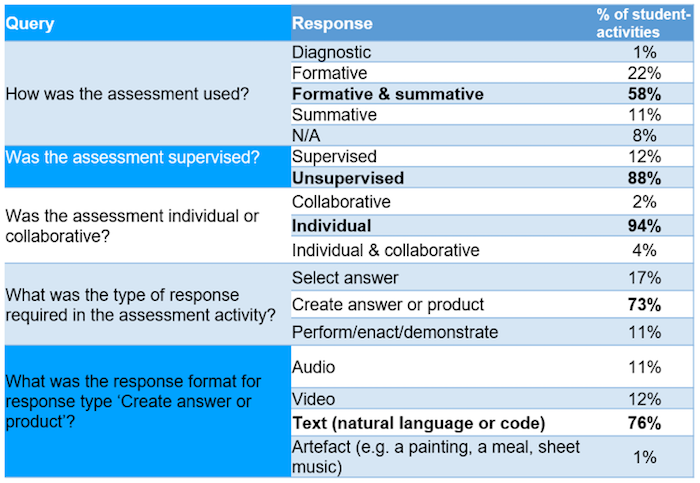

A range of assessments was included in Pilot 3 (Table 2). There was a spread of formative and summative assessment, with the majority serving both purposes: development and grading. Most of the assessment was individual and unsupervised, and involved the creation of text artifacts. The audio tools were used for language testing and the video tool assisted with collaborative assessment.

Table 2: Summary details for assessment in Pilot 3

Students' views of the TeSLA system were drawn from the 11,102 unique students who used it, based on responses from the 2,222 of them who completed the pre- and post-intervention questionnaires.

Participants in the pilot studies reported positive acceptance of the TeSLA instruments and believed that they would increase students' awareness of cheating and plagiarism and the trustworthiness of e-assessment, but would not eliminate the possibility of fraud. Students' overall experience with the TeSLA instruments was positive for more than 50% of students at every partner university, with more than 70% considering the key advantages of e-assessment with e-authentication to be "to ensure that my examination results are trusted" and "to prove that my essay is my own original work".

Pilot leaders reported that most of the students interviewed faced no issues with the TeSLA instruments and managed to complete the assessment activities without the help of a teacher. For example: "Students were able to finish the tasks quite independently. There were no major technical problems." "None of the students highlighted any technical issue and that the only difficulty mentioned was to enable the camera on a laptop." "Five (out of nine) of the students at Sofia University said they did not encounter any problems when using the instruments from TeSLA system and they succeeded in completing the enrolment and follow-up on their own."

Three main factors contributed to the ease of use of the TeSLA system:


However, between 5% and 19% of students at each institution faced technical problems, such as "difficulties to understand in which order the tasks should have been completed", a difficulty compounded by some students' lack of experience in using Moodle (JYU), and "Browser problems. Chrome and Explorer not working".

For some students, the length of the enrolment text (250 words) that they had to type for the Keystroke Dynamics instrument was a problem: "It was hard to make up 250 words," "250 words to write was pretty much. If you only do it once, it's ok."

Some students found that the completion of the project surveys was a significant additional burden on top of using the TeSLA system. Consequently, in some institutions many students were willing to use the TeSLA system but were unwilling to complete the surveys.

Some students raised concerns about surveillance, and about tensions arising from the power differential between universities and students and from the system's opacity (it was unclear how the system made its decisions). These concerns varied according to students' digital literacy (computer science students were more comfortable using the system).

Some students expressed difficulties in trusting the reliability of the biometric instruments. For example, some students feared that the biometric tools would malfunction, while others were afraid that in the long run the system would no longer recognise them.

Some students had difficulty understanding what behaviour was considered acceptable during system activation. This was due to a lack of clarity about the definition of cheating that applied.

In Pilot 3, on average 40% of students were unsure about the nuances of cheating and plagiarism. Qualitative data suggested that students needed to increase their understanding of:

By applying a range of flexible strategies that took account of the specific institutional context, institutional regulations and legal restrictions, all partner institutions managed to involve SEND students in the pilots and were able to study their experiences with TeSLA. Most participating SEND students were identified through the pre-pilot questionnaire, in which students were asked to voluntarily self-identify any disabilities. Other methods included personal invitations to known SEND students, establishing a SEND-specific course, and using institutional services that already work with SEND students. The most effective strategy was approaching students individually and motivating them, firstly, to take part in TeSLA testing and, secondly, to share their experience with their teacher.

The most reliable and informative way to identify the specific issues SEND students faced in using the TeSLA instruments tended to be individual sessions monitored by a teacher, in which the student tested each instrument, discussed specific problems with the teacher and made specific recommendations for improving the accessibility and usability of that instrument.

The reasons SEND students gave for not participating included: lack of time, being busy with exams, health problems, the consent form, technical problems (such as not having the required webcam and microphone, or access difficulties), and not seeing any advantage in using TeSLA because their university already offered several benefits for SEND students (such as online final exams instead of on-site exams).

Nonetheless, all SEND students who did participate appreciated the availability of the TeSLA system, which they saw as:

However, although most of the SEND students appreciated the opportunities that TeSLA provided for conducting exams from home on certain occasions, they did not see online assessment as an alternative to face-to-face assessment because this would limit opportunities to socialise with other students.

In fact, many of the usability and accessibility issues raised by SEND students related to the VLE in which TeSLA was integrated rather than to the TeSLA system itself. The technical team argued that TeSLA could not be expected to address issues which arise from the VLE rather than from TeSLA. The students’ experiences and opinions of the accessibility and usability of the TeSLA system and its various instruments varied a great deal according to the type and degree of their disability. The data suggests that, due to the heterogeneity of SEND students and the specificity of different disability groups, it is not possible for a system such as TeSLA to satisfy equally the needs of such a diverse group of learners in terms of accessibility and usability. The two groups of SEND students that were most vulnerable in this respect were the vision impaired students and the deaf students.

The other main users of the system were the teachers. In implementing the TeSLA system, partners applied a student-centred pedagogical approach (see ESG et al, 2015; Bañeres et al, 2016) to the e-assessment activities. The assessments were designed to allow students to reveal their skills and their understanding of the different knowledge domains to the best of their abilities. Students also received some guidance to support their trust in the TeSLA system and its instruments, including ways to address technological problems they might encounter.

The implementation of the TeSLA system in university processes raised questions from a pedagogical point of view. Teaching staff identified a range of obstacles that would make it more difficult to apply TeSLA in their courses:

Some instruments were harder to implement for particular assessment activities, despite the TeSLA instruments being intended to be agnostic to assessment type. For example, keystroke dynamics and forensic analysis were useful in assessments where students were required to write long texts (e.g., reports, essays and open questions). However, they were less appropriate for assessments that mostly require short text responses (e.g., in mathematics and computer science). Voice and face recognition were sometimes difficult to implement, depending on existing assessment practices (e.g., whether microphones and webcams were already used, and whether they were already available to each student). Overall, teachers were enthusiastic about the modularity of the TeSLA system (the ability to enable or disable instruments as they wish, and so to take student needs and student feedback into account in different ways).

The analysis of how the TeSLA instruments were integrated into pilot courses provides a typology of approaches according to the design of the assessment activity and the needs of the institution to authenticate students and/or provide a check on the authorship of their written products.

The course and assessment scenarios described are based on actual successful practice by the pilot institutions. Other approaches can readily be imagined, and will no doubt develop as TeSLA is used in the future, but these scenarios provide a solid starting point, illustrating approaches that have been demonstrated to be practicable and to make effective use of the TeSLA instruments.

The approaches were:

The instruments most used by teaching staff were those that students found least intrusive (Forensic Analysis and Anti-Plagiarism). However, this was not the case for Pilots 3a and 3b taken together, where Face Recognition was the most used instrument. Teaching staff used each of the available approaches to assessment design, which involved using the TeSLA instruments in all types of assessment: diagnostic, formative, summative, at home, at university, supervised and unsupervised, individual and collaborative, and with various response types (selection, performance and creation) and formats (mouse click, text, sound, image and programming code) (Okada et al, 2018).

Most teaching staff from all institutions gave positive responses to questions about trust. For example, "it will increase the trust among universities and employers" and "it will help participants trust the outcomes of e-assessment". In particular, teachers said that the Anti-Plagiarism and Forensic Analysis tools increased trust and academic integrity. Meanwhile, some teaching staff believed that authentication via the TeSLA system could be used as a tool to motivate students and make them more proactive (i.e., it can go beyond fighting cheating). The system was generally not perceived simply as a tool that assesses situations automatically in a binary way (e.g., cheating/no cheating) but rather as a continuous monitoring tool that gives indications and generally promotes good practice and trust.

The results from the TeSLA project included a final large-scale study demonstrating that the system can operate at scale and be successfully embedded within a university's virtual learning environment (VLE). It should be noted that the nature of the pilots meant that participants were essentially self-selecting: there was no requirement to participate, so only those who wanted to signed the consent form and took part in tests. A further self-selection step took place at the post-questionnaire, which some participants chose not to complete as there was no compulsion to do so. Therefore, the findings cannot be considered fully representative of the student body as a whole.

Despite this caveat, there was broad representation in terms of language, subject, gender and special need or disability. The TeSLA system proved to be an acceptable intrusion for the majority of those who did take part. The SEND study showed that while generally welcomed, the TeSLA suite of tools could not fully support all students with special needs and disabilities.

Students were clear that they were more accepting of some tools than others. The Anti-Plagiarism and Forensic Analysis tools were the most acceptable, while the Face and Voice Recognition tools were less accepted. This was probably due in part to the effort required to set them up with the initial 'enrolment' data. Another factor is the much more personal nature of the data these tools collected, and the fact that these data were usually not directly related to the work being undertaken.

Teaching staff were broadly satisfied with TeSLA, with many agreeing that “it will increase the trust among universities and employers” and “it will help participants trust the outcomes of e-assessment”.

Most teaching staff thought that TeSLA was easy to use and user-friendly (they particularly liked the fact that the system is modular, allowing some tools to be switched off as necessary). Some teaching staff acknowledged that some TeSLA tools were harder to use and to accommodate than others, some thought that it was sometimes cumbersome and impractical, and some thought that TeSLA sometimes required assessment activities to be redesigned. Teaching staff also acknowledged some problems: some were technical, some related to the interface (ease of navigation) and requirements (e.g., the number of characters that had to be typed), and some were cultural. However, teaching staff saw all these issues as resolvable with the help of a colleague or technician, with better instructions and tutorials, or with assistive tools. Teaching staff commented that a technical FAQ, data security and privacy awareness, guidelines on use and on interpreting results, and e-authentication and authorship verification policies were all necessary. Some teaching staff would have liked more detailed guidance on how to interpret the outcomes of the TeSLA tools. Teaching staff thought that it was not possible for TeSLA to satisfy equally the accessibility and usability needs of SEND learners, who are, by definition, highly heterogeneous. However, they believed that the interface should be designed to be usable with assistive technologies such as screen readers.

With respect to the research question about users' experience of the TeSLA system, students and teachers were broadly positive about using the TeSLA instruments. In particular, students were willing to share various types of personal data, while both students and teachers believed that the TeSLA system would increase trust in e-assessment. Teachers did find some instruments harder to implement; however, they were generally enthusiastic about the modularity of the TeSLA system.

With respect to the research question “Does the TeSLA system meet stakeholders’ expectations?”, and based on extensive conversations with institutional stakeholders about TeSLA and the level of interest they have shown, it is clear that TeSLA exceeds their expectations.

Questions remain about deployment costs and data privacy, as they do for any similar technology. That said, institutional stakeholders recognise the importance of bringing more trust to existing fully online programmes and more flexibility to traditional face-to-face delivery. Flexibility and adaptability to individual needs and regulation are probably the most relevant components of the solution for institutional stakeholders to date.

TeSLA is opening a brand-new segment in the educational technology space. While there are individual technologies that tackle some of the challenges that TeSLA addresses, no one has invested effort in creating a holistic, interoperable trust framework. This means that, unlike other commercial players, TeSLA can leverage different and new biometric technologies as they are developed. TeSLA can also adapt to different regulations, IT/cloud infrastructures and privacy preferences.

Reflecting on the principles of academic integrity (McCabe, Trevino & Butterfield, 2001), online proctoring has a particular niche in infrequent, high-stakes assessment, where it can operate as a deterrent and allow conventional examinations to be held in an examination hall extended to encompass every student under examination. The TeSLA suite of e-authentication tools, with its ability to be seamlessly embedded within an institution's VLE, can deter cheating and build trust in online assessment from within the existing 'classroom'. TeSLA also offers an opportunity, in that it can facilitate new forms of online assessment and allow more relevant forms of assessment to be created.

It can therefore be envisaged that courses will be designed around the TeSLA technology, with the greater variety of relevant assessment that it enables. With clear information, policy and guidance, these developments could establish the 'layers of trust' (Edwards et al, 2018) that would make e-authentication generally acceptable, which in turn would lead to greater trust in the results of online assessment.

Finally, we have not forgotten that these technologies (like so many others) are not yet sufficiently mature that they can fully support all SEND students. Therefore, alternatives should continue to be developed to ensure all students have a rich and rewarding learning and assessment experience.

Acknowledgement: We are grateful to the European Commission for funding this project, and to the TeSLA pilot leaders and consortium team. This work is supported by H2020-ICT-2015, Number 688520.

The full list of partners is:

Agència per a la Qualitat del Sistema Universitari de Catalunya (AQU), Anadolu Üniversitesi (AU), European Association for Quality Assurance in Higher Education (ENQA), European Quality Assurance Network for Informatics Education (EQANIE), IDIAP, Imperial College London, INAOE, Institut Mines-Télécom (IMT), LPLUS GmbH, Open Universiteit (OUNL), ProtOS, Sofia University (SU), Technical University of Sofia (TUS), The Open University (OUUK), Universitat Oberta de Catalunya (UOC), Université de Namur (UNamur), University of Jyväskylä (JYU), Watchful.

Bañeres, D., Rodríguez, M. E., Guerrero-Roldán, A.-E., & Baró, X. (2016). Towards an adaptive e-assessment system based on trustworthiness. In S. Caballé & R. Clarisó (Eds.), Formative assessment, learning data analytics and gamification (pp. 25–47). Boston: Academic Press. https://doi.org/10.1016/B978-0-12-803637-2.00002-6

Bartley, J. M. (2005). Assessment is as assessment does: A conceptual framework for understanding online assessment and measurement. In S. Howell & M. Hricko (Eds.), Online assessment and measurement: Foundations and challenges (pp. 1–45). Hershey, PA: IGI Global. https://doi.org/10.4018/978-1-59140-720-1.ch001

Edwards, C., Whitelock, D., Okada, A., & Holmes, W. (2018). Trust in online authentication tools for online assessment in both formal and informal contexts. In L. Gómez Chova, A. López Martínez, & I. Candel Torres (Eds.), ICERI2018 Proceedings (pp. 3754–3762). Seville: IATED Academy.

ESG: European Students’ Union (ESU) (Belgium), European University Association (EUA) (Belgium), European Association of Institutions in Higher Education (EURASHE) (Belgium) and European Association for Quality Assurance in Higher Education (ENQA) (Belgium) (2015). Standards and guidelines for quality assurance in the European higher education area (ESG), Bruxelles, Belgium, European Students’ Union (Online). Retrieved from http://www.esu-online.org

Innovation Alliance (2019). Academic integrity. Retrieved from http://www.innovationalliance.eu/de/1-0-understanding-academia/1-2-understanding-academics/1-2-2-academic-integrity/

McCabe, D. L., Trevino, L. K., & Butterfield, K. D. (2001). Cheating in academic institutions: A decade of research. Ethics & Behavior, 11(3), 219–232. Retrieved from https://doi.org/10.1207/S15327019EB1103_2

Okada, A., Whitelock, D., Holmes, W., & Edwards, C. (2018). Student acceptance of online assessment with e-authentication in the UK. British Journal of Educational Technology (early access).

Van Veen, F., & Sattler, S. (2018). Modeling updating of perceived detection risk: The role of personal experience, peers, deterrence policies, and impulsivity. Deviant Behavior. https://doi.org/10.1080/01639625.2018.1559409

Wade, M. J., & Breden, F. (1980). The evolution of cheating and selfish behavior. Behavioral Ecology and Sociobiology, 7(3), 167–172. Retrieved from https://www.jstor.org/stable/4599323

WGU (2020). Assessments allow students to prove their competency. University web site. Retrieved from https://www.wgu.edu/admissions/student-experience/assessments.html

Authors:

Denise Whitelock is the Interim Director of the Institute of Educational Technology at the Open University, UK. She is a Professor of Technology Enhanced Assessment and Learning and has over thirty years’ experience in AI for designing, researching and evaluating online and computer-based learning in Higher Education.

For a complete publication list see: http://oro.open.ac.uk/view/person/dmw8.html

Email: denise.whitelock@open.ac.uk

Chris Edwards is a lecturer in the Open University's Institute of Educational Technology. In addition to this research into student authentication, he chairs the Masters module Openness and innovation in eLearning and also supports Quality Enhancement activities focussing on using new data methods and structures to better understand student study choices.

For a complete publication list see: http://oro.open.ac.uk/view/person/che2.html

Email: chris.edwards@open.ac.uk

Alexandra Okada is an educational researcher in the Faculty of Wellbeing, Education & Language Studies at The Open University, UK, and a senior fellow of the Higher Education Academy. Her expertise lies at the intersection of technology-enhanced learning and Responsible Research and Innovation.

For a complete publication list see: http://oro.open.ac.uk/view/person/alpo3.html

Email: ale.okada@open.ac.uk

Cite this paper as: Whitelock, D., Edwards, C., & Okada, A. (2020). Can e-Authentication Raise the Confidence of Both Students and Teachers in Qualifications Granted through the e-Assessment Process? Journal of Learning for Development, 7(1), 46-60.