2020 VOL. 7, No. 1

Abstract: This mixed-methods study examines the design and delivery of MOOCs to facilitate student self-monitoring for self-directed learning (SDL). The data collection methods included an online survey (n = 198), semi-structured interviews of MOOC instructors (n = 22), and document analysis of MOOCs (n = 22). This study found that MOOC instructors viewed self-monitoring skills as vital for SDL. MOOC instructors reported that they facilitated students’ self-monitoring with both internal and external feedback. Students’ internal feedback is related to cognitive and metacognitive processes. Among the methods used to facilitate cognitive processes were quizzes, tutorials, learning strategies, learning aids, and progress bars. To foster metacognition, MOOC instructors provided reflection questions and attempted to create learning communities. In addition, MOOC instructors, teaching assistants, and peers provided external feedback for students’ self-monitoring. Among the other strategies, synchronous communication technologies, asynchronous communication technologies, and feedback tools were used for diverse purposes in supporting students’ self-monitoring.

Keywords: Massive Open Online Courses (MOOCs), self-monitoring, self-directed learning, instructional design, MOOC instructors.

Self-directed learning (SDL) is considered highly important for learners in massive open online courses or MOOCs (Bonk, Lee, Reeves & Reynolds, 2015; Kop & Fournier, 2011; Terras & Ramsay, 2015). Such views on learning make sense given the wealth of content and learning resources that can be made available in a MOOC (Bonk et al, 2015; Zhang, Bonk, Reeves & Reynolds, 2020). Without some ability to self-direct one’s learning, many opportunities to enhance one’s job outlook or career advancement become limited because learning resources are increasingly found online (Bonk, 2009). In addition, advances in open educational resources (OER) mean that more resources are freely available. Nevertheless, wise decisions on accessing and using such resources require extensive self-regulation, planning, self-monitoring, and self-assessment (Zhu & Bonk, 2019a).

However, SDL does not mean that instructors should leave learners to themselves. On the contrary, it is conceivable that learners require even more forms of guidance and support to make wise and efficient decisions. In fact, researchers from the field of nursing have stated that instruction and guidance on SDL at the beginning of higher education courses are necessary because learners feel anxious about SDL (Hewitt-Taylor, 2001; Lunyk-Child, Crooks, Ellis, Ofosu, & Rideout, 2001; Prociuk, 1990). Consequently, instructor facilitation strategies are vital to help learners develop the appropriate SDL skills (Kell & Deursen, 2002; Lunyk-Child et al, 2001).

Based on Garrison’s (1997) framework designed at the University of Calgary, SDL includes three dimensions: (1) motivation, (2) self-management, and (3) self-monitoring. Self-monitoring refers to monitoring the learning strategies, processes, and the ability to think about thinking. It is related to cognitive and metacognitive processes. For example, learners can evaluate their learning and conduct self-reflection. Studies by Chang (2007) and Coleman and Webber (2002) argued that self-monitoring can improve learning performance. In effect, as still other studies show, teaching self-monitoring skills will benefit learners (e.g., Delclos & Harrington, 1991; Maag et al, 1992; Malone & Mastropieri, 1991; Schunk, 1982) and result in improved educational outcomes.

However, previous studies indicate that research on the instructional design as well as the actual delivery of MOOCs from the perspective of MOOC instructors is lacking (Margaryan, Bianco, & Littlejohn, 2015; Ross, Sinclair, Knox, Bayne, & Macleod, 2014; Watson et al, 2016; Zhu, Sari, & Lee, 2018). While there is MOOC research from the learner perspective, what is especially lacking is research on facilitating student self-monitoring from an instructor perspective.

In response to these research gaps, this study investigated the design and delivery of MOOCs to facilitate learners’ self-monitoring skills from instructors’ perspectives. Importantly, different technologies used to facilitate such self-monitoring are also addressed. The purpose of this study, therefore, is to better understand how MOOC instructors facilitated self-monitoring skills while designing and delivering MOOCs.

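The following two research questions guided this study: (1) How do MOOC instructors design and deliver their MOOCs to facilitate students’ self-monitoring skills? (2) How are various technologies employed to support learners’ self-monitoring skills?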

As mentioned, Garrison’s (1997) three-dimensional model of SDL was used as the main theoretical framework of this study. The framework includes: (1) self-management (i.e., task control); (2) self-monitoring (i.e., cognitive responsibility); and (3) motivation (i.e., entering motivation and task motivation). Self-monitoring, as a prerequisite of SDL, is the focus of this particular study. Self-monitoring involves both cognitive and metacognitive processes. Given that internal self-monitoring alone is not sufficient to promote cognitive improvement, external feedback from instructors is predicted to support learners’ self-monitoring (Garrison, 1997); that was also expected to be the case in this MOOC study.

Chang (2007) stated that self-monitoring refers to learners’ skills to track and evaluate their progress towards their learning goals. By tracking and evaluating their own learning behavior, learners could have a better understanding of the learning material (Coleman & Webber, 2002; Zimmerman et al, 1995). Previous research has indicated that self-monitoring can improve learners’ classroom behavior and academic performance (Coleman & Webber, 2002; Lan, 1996). Furthermore, self-monitoring not only improves learning but also increases students’ self-efficacy for learning (Zimmerman, 1995) and helps them become self-motivated.

Given that many prior empirical studies have indicated that teaching self-monitoring skills will be beneficial for learners (e.g., Delclos & Harrington, 1991; Maag et al, 1992; Malone & Mastropieri, 1991; Schunk, 1982), there is a need today to continue this vein of research by exploring when, if, and how self-monitoring occurs as well as how it can be enhanced in new and emerging open and online forms of distance learning such as MOOCs.

Early researchers, Zimmerman and Paulsen (1995), proposed four phases to improve students’ self-monitoring skills. These phases are: (1) basis of self-monitoring (e.g., collecting data about the academic activity); (2) structured self-monitoring (e.g., using a structured monitoring protocol provided by the instructor); (3) independent self-monitoring (e.g., adapting the course-related self-monitoring protocol for personal needs); and (4) self-regulated self-monitoring (e.g., developing self-monitoring protocols by learners themselves).

SDL and Self-monitoring in MOOCs

Many researchers have argued that SDL is a key component in effective adult education (Brockett & Hiemstra, 1991; Candy, 1991; Garrison, 1997; Merriam, 2001). Given that most MOOC learners are adults (Shah, 2017), SDL is considered an important element in the learning environment of MOOCs (Bonk et al, 2015; Kop & Fournier, 2011; Terras & Ramsay, 2015). As a result, researchers are becoming increasingly interested in SDL in MOOCs (Bonk et al, 2015). These researchers are often focused on the general perceptions of SDL from students’ perspectives (Bonk et al, 2015; Loizzo, Ertmer, Watson & Watson, 2017) as well as the relations between elements of SDL in MOOCs (Beaven et al, 2014; Kop & Fournier, 2011; Terras & Ramsay, 2015). Despite the substantial increase in universities offering MOOCs (Bonk et al, 2015; Shah, 2019), especially in the Global South (Zhang et al, 2020), most MOOC empirical studies focus on the students’ motivation and completion rates (Zhu et al, 2018). Consequently, research on the design of MOOCs to facilitate self-monitoring from the instructor’s perspective is rare. In addressing this gap, this study investigates instructor perceptions and practices of designing and delivering MOOCs to facilitate self-monitoring.

A sequential mixed methods design (Creswell & Plano-Clark, 2017; Fraenkel & Wallen, 2009) was used in this study. First, quantitative data collection and analysis were conducted. These were followed by qualitative data collection and analysis (Creswell & Plano-Clark, 2017). The three main data sources in this study included: (1) an online survey with 198 valid responses; (2) semi-structured interviews with 22 volunteer instructors; and (3) course reviews of the MOOCs designed by the 22 interviewees. Using different data sources serves the purpose of data triangulation (Patton, 2002).

Online Survey

The authors developed a survey by adapting and modifying instruments developed by Fisher and King (2010) and Williamson (2007), which were based on the theoretical framework of Garrison (1997). First, the authors conducted semi-structured interviews with four MOOC instructors concerning the design and delivery of MOOCs for self-monitoring, self-management, and motivation; in effect, the interviews were designed to directly target the key aspects of SDL. These interviews led to a pilot survey with 48 MOOC instructors to design the survey instrument (Zhu & Bonk, 2019b). The final survey consisted of 29 questions, including 20 five-point, Likert-scale questions with seven items related to self-monitoring strategies (e.g., learners’ goal setting, self-evaluation, responsibility of learning, learning beliefs, and so on), three closed-ended questions (e.g., their perceptions of SDL, including self-monitoring in MOOCs), and six questions asking about different demographic-related items. Cronbach’s alpha was computed in SPSS to check the reliability of the survey. The resulting alpha for the self-monitoring items was 0.76, which is within the commonly accepted range.
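For readers unfamiliar with the reliability check described above, the following is a minimal sketch of how Cronbach’s alpha can be computed from item-level responses. It is not the authors’ SPSS procedure, and the function name `cronbach_alpha` and the small response matrix are hypothetical, included only for illustration; the study’s raw data are not reproduced here. The sketch uses the standard formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items.

```python
from statistics import variance
from typing import List, Sequence


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Return Cronbach's alpha for a respondents-by-items score matrix.

    Each inner sequence holds one respondent's scores on the k items
    of a single scale (e.g., seven Likert items on self-monitoring).
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")

    # Sample variance of each item (column) across respondents.
    item_columns = list(zip(*responses))
    sum_item_variances = sum(variance(col) for col in item_columns)

    # Sample variance of each respondent's total score.
    totals: List[float] = [sum(row) for row in responses]
    total_variance = variance(totals)

    return (k / (k - 1)) * (1 - sum_item_variances / total_variance)


# Hypothetical five-point Likert responses (3 respondents x 2 items),
# NOT data from the study.
example = [[1, 2], [2, 1], [3, 3]]
alpha = cronbach_alpha(example)
```

Values closer to 1 indicate that the items vary together; a scale whose items are perfectly consistent with one another yields an alpha of exactly 1.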

MOOC Instructor Interviews

The interview protocol had 12 questions related to MOOC design and SDL as well as two questions concerning MOOC instructor backgrounds and experiences in designing MOOCs. The interviewees were selected based on four criteria. First, they had to volunteer to be interviewed by providing their contact email at the end of the survey. Second, the volunteers had to indicate in the survey that they considered students’ SDL skills in their MOOC design and delivery phases. Third, their mean scores for the five-point, Likert-scale questions had to be higher than 2.5. Fourth, the researchers attempted to select potential MOOC instructor interviewees who represented a diverse array of countries and MOOC subject areas or topics addressed. Also considered was previous experience with online or blended learning, prior MOOC teaching experience, MOOC format (i.e., instructor-led with teaching support, instructor-led without teaching support, self-paced, etc.), and MOOC providers or platforms. In effect, the sampling was highly strategic in nature so as to obtain a diverse pool of MOOC instructors which could yield quite varied SDL experiences.
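The first three selection criteria are mechanical and can be expressed as a simple filter over survey records; the fourth (diversity of country, subject area, experience, format, and platform) was a judgment made by the researchers and is not encoded. The sketch below is an illustration only: the `SurveyRespondent` record, its field names, and the `is_eligible` helper are hypothetical and do not describe the authors’ actual data files or workflow.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class SurveyRespondent:
    """Hypothetical record for one survey respondent."""
    respondent_id: str
    contact_email: Optional[str]   # None if the respondent did not volunteer
    considered_sdl: bool           # reported considering SDL skills in MOOC design
    likert_scores: List[float]     # responses to the five-point Likert items


def is_eligible(r: SurveyRespondent, threshold: float = 2.5) -> bool:
    """Apply criteria 1-3: volunteered, considered SDL, mean score above threshold."""
    volunteered = bool(r.contact_email)
    return volunteered and r.considered_sdl and mean(r.likert_scores) > threshold


# Hypothetical respondents, not data from the study.
pool = [
    SurveyRespondent("r1", "r1@example.org", True, [4, 4, 3]),
    SurveyRespondent("r2", None, True, [5, 5, 5]),            # did not volunteer
    SurveyRespondent("r3", "r3@example.org", True, [2, 3]),   # mean is exactly 2.5
    SurveyRespondent("r4", "r4@example.org", False, [4, 5]),  # did not consider SDL
]
eligible_ids = [r.respondent_id for r in pool if is_eligible(r)]
```

Because the criterion is stated as "higher than 2.5", the comparison is strict, so a respondent whose mean is exactly 2.5 is excluded in this sketch. The resulting eligible pool would then be narrowed by hand to balance the diversity considerations described above.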

Employing the above criteria, 22 MOOC instructors were selected for the interviews. Based on the work of Guest, Bunce, and Johnson (2006), in such non-probabilistic sampling interviews, saturation tends to occur within the first twelve interviews. Stated another way, the twenty-two interviews employed here went well beyond the data saturation point. The MOOC instructor interviewees’ institutions were from the following countries: the US (n = 9), the UK (n = 6), Australia (n = 3), France (n = 1), Belgium (n = 1), the Netherlands (n = 1), and Israel (n = 1). While definitely not a comprehensive assembly of countries, such sampling procedures allowed the researchers to begin to learn how SDL in MOOCs might be viewed and implemented in different regions of the world.

The interviewees also represented a diverse array of expertise and previous online experience. As an example, Emma from the US taught a MOOC in the area of literacy and language science using Coursera. At the time of the interview, she had just taught this one MOOC and it was self-paced. Similarly, Mason, from Australia, had only one MOOC teaching experience, but, unlike Emma, he had teaching assistant support. His MOOC, which also employed Coursera, was in education. Fernando, from Belgium, on the other hand, taught research methods three times via MOOCs using the Blackboard platform. Importantly, just like Mason, he also had teaching assistant support during his MOOC offering. In contrast, both Andrew and Emily in the UK used the FutureLearn platform to deliver multiple MOOCs with teaching assistance. Andrew had delivered three previous MOOCs in the field of art, whereas Emily had completed two MOOCs in the field of medicine and health. Those are just five examples of the 22 volunteer interviewees who each had highly interesting MOOC experiences and personal stories.

To standardize the process and increase the interview efficiency, the first author shared the interview protocol with interviewees to better prepare them before their interview. In addition, the first author conducted a review of each interviewee’s MOOC to support the interview conversation. Interviews were conducted and recorded through Zoom, a synchronous meeting tool. Each interview lasted around 30 to 60 minutes. The total interview time was 828 minutes. The interview data started to reach a saturation point after about the first 15 interviews but several more were conducted to gather data from more countries and teaching backgrounds. The interviews, in fact, ended after obtaining 22 participants, given that limited new information was being added at this point in the interview process (Creswell & Plano-Clark, 2017; Merriam, 1988, 2009).

To increase the trustworthiness of the study, member checking was conducted with interviewees to check the accuracy of the transcripts. Ten out of 22 interviewees provided detailed revision (e.g., misspelling, corrections), while the rest of the interviewees reported that the transcript that they were sent was accurate. In addition, two interviewees shared their research papers on MOOC-related teaching with the researcher as supplementary materials. A research log was created to track and reflect on the interview and research process. Moreover, a $20 Amazon gift card was offered to all the interviewees in appreciation of their interview and member-checking time.

Document Analysis

The actual MOOCs designed or taught by the interviewees were analyzed by the first author. She reviewed each course in terms of the learning resources, activities, and assessments provided, both before and after the instructor interview. This document analysis helped to triangulate the data and, thereby, increase the trustworthiness or validity of the study.

Data Analysis

For quantitative data from the survey, the data was analyzed using descriptive statistics such as mean, frequency, and percentage in SPSS and Excel. These quantitative results are reported in the next section.

Regarding the qualitative data, a classical content analysis was conducted in NVivo 12. Following member checking, the researchers analyzed the data abductively (Leech & Onwuegbuzie, 2007). The unit of analysis in this study was the meaning unit. To perform an abductive content analysis, the first author had a general self-monitoring concept and research questions in mind. Accordingly, she read through the entire dataset, chunked the data into smaller meaningful parts, labeled each chunk with a code, and compared each new chunk of data with previous descriptions. After data coding, the lead researcher categorized the codes by similarity into themes.

The number of the survey participants in this study was 198. The participants in this study had diverse disciplinary backgrounds including social science, medicine and health, language and literacy, business and management, etc. Among these 198 participants, 102 (51.5%) did not have any online or blended course design and teaching experience prior to designing their initial MOOC. In terms of MOOC design and teaching experience, 118 participants (59.6%) had designed or taught only one MOOC. What seems apparent from these findings is that most study participants did not have extensive MOOC or online learning design and teaching experiences.

Research Question #1: How Do MOOC Instructors Design and Deliver Their MOOCs to Facilitate Students’ Self-Monitoring Skills?

Survey Results

Survey participants of this study (n = 198) rated on a scale of 1 (Strongly disagree) to 5 (Strongly agree) whether the design and delivery of their MOOC helps students develop self-monitoring skills on various elements. Participants agreed most strongly that the design and delivery of their MOOCs helped students to be in control of their learning (M = 4.15; SD = 0.55), followed by “helps the student be responsible for his/her learning” (M = 4.06; SD = 0.79), “helps the student be able to find out information related to learning content for him/herself” (M = 4.02; SD = 0.70), “helps the student evaluate his/her own performance” (M = 3.94; SD = 0.78), “helps the student be able to focus on a problem” (M = 3.87; SD = 0.74), and “helps the student have high beliefs in his/her abilities of learning” (M = 3.73; SD = 0.74). The statement “helps the student set his/her own learning goals” was rated the lowest (M = 3.68; SD = 0.91) among the seven items.

Interview Results

MOOC instructors reported that they facilitated students’ self-monitoring in a variety of ways, from helping students with internal self-feedback to providing external feedback. Internal feedback included students’ cognitive and metacognitive processes. Cognitive processing was related to self-observation, self-judgment, and self-reaction. Metacognitive processing was related to self-reflection and thinking critically. External feedback included that obtained from MOOC instructors and their teaching assistants as well as from peers enrolled in the MOOC.

Facilitate student internal feedback. MOOC instructors fostered the cognitive learning processes of participants related to self-monitoring in myriad ways. For instance, they accomplished this through quizzes for self-assessment, tutorials on technology use, navigational aids for the course, supplemental resources, and instructional modeling. In this study, among 22 interviewees, 13 mentioned that they used quizzes or tests for student self-assessment. Lucas, a social science instructor, stated: “I do think frequent quizzes and somewhat lengthy quizzes are really helpful ... It makes the whole thing hang together as a unit. So, I gave little quizzes at the end of my videos.”

Besides facilitating cognitive processes, MOOC instructors also facilitated students’ metacognitive processes while designing and delivering MOOCs. In terms of metacognition, the interviewees reported that they encouraged students to reflect and think critically through reflection questions. Among 22 interviewees, five had self-reflection questions embedded in the MOOC. A science instructor from the US, Samuel, utilized weekly questions to foster self-monitoring and reflection in his MOOC. As he stated:

We do ask, kind of, a summary discussion question at the end of the week. I'll ask: “What did you learn? How do you feel about that? How would this apply to a real world application?” So, we asked those kind[s] of reflection questions.

Provide external feedback to help students’ self-monitoring. In addition to internal feedback, MOOC instructors also mentioned that they provided external feedback to help student self-monitoring. The external feedback was primarily from MOOC instructors, teaching assistants (TAs), and student peers.

Six out of 22 interviewees mentioned that they or their TAs provided feedback to students to assist in monitoring their learning. In addition, Joseph from the UK provided feedback through panels or lectures. As Joseph explained:

We have [a] discussion moderator, who was also in that space talking to students. So, we try to engage students on some of those points, and question some of the things that they're saying. Maybe get them to reflect.

Interestingly, 12 out of 22 MOOC instructors had TAs in their MOOCs; in such courses, the TAs often helped provide feedback to students. For instance, the business instructor, Ethan, asked his TA to provide feedback to students on a discussion board. As Ethan explained:

We do have a tutor, who monitors the discussion boards and looks if any inquiries, or anything else comes in. But, I think her [TA] time is restricted to half a day a week. Her work has a lot of monitoring. And [she] follows up with all sorts of things.

Thirteen out of 22 interviewees adopted peer-assessment to help students’ self-monitoring. They viewed self-monitoring as a social process which involves interaction with others. Peer-assessment was considered beneficial for the learners being assessed as well as those conducting the assessment (Barak & Rafaeli, 2004; Dochy, Segers & Sluijsmans, 1999). In peer assessment, students not only obtain feedback from others, but also engage in self-reflection by providing feedback to peers. For example, Emma encouraged learners to provide feedback to their peers. As she observed:

We also put in peer evaluation because the interaction between students would motivate them. We give a very, very basic syllabus because we don't know what the educational background and the levels of the students. We gave them five different key points to enable them to evaluate other students on assignment.

MOOC Review Results

Through our review of the MOOCs of the 22 instructors who were interviewed, it was apparent that the design and delivery of MOOCs facilitated students’ self-monitoring through their internal cognitive and metacognitive processes as well as various external supports. For example, quizzes, providing introductions, aids to help with course navigation, progress bars, and optional resources were used to help foster cognitive processes. In addition, to foster engagement as well as the metacognitive processes, these MOOC instructors encouraged learners to participate in discussion forums and attempted to build a sense of a learning community.

Facilitate student self-monitoring. As mentioned before, practice quizzes with immediate feedback were provided for students’ self-assessment. Whereas some of the quizzes were independent tasks, others were embedded in MOOC videos (see Figure 1). Once a quiz was finished, students could obtain immediate automatic feedback and brief comments.

Figure 1: Example of the quizzes embedded in videos used in MOOCs.

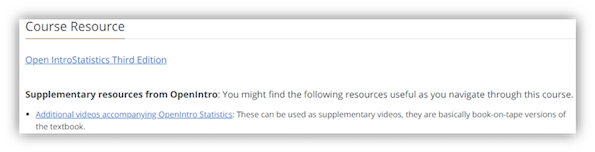

To help students monitor their learning process, a progress bar was often employed in the MOOCs we analyzed. Additionally, optional reading materials or additional learning resources were used to help students make decisions on their own based on their learning situations (see Figure 2). With these resources, students could monitor their own learning progress and choose learning materials that fit their particular needs and preferred processes.

Figure 2: Example of the supplementary resources used in MOOCs.

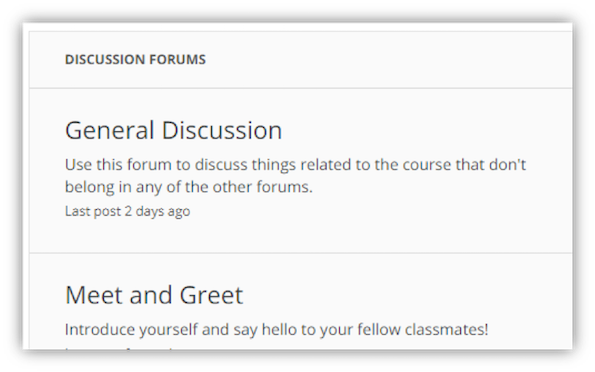

In addition, as is often found in smaller online and blended learning situations, discussion forums were commonly incorporated into the 22 MOOCs that we evaluated, at least, in part, for instances of students’ metacognitive processing. It was in these discussion forums and related resources that the MOOC instructors encouraged learners to self-reflect, share ideas, and build learning communities (see Figure 3).

Figure 3: Example from discussion forum used for building a learning community.

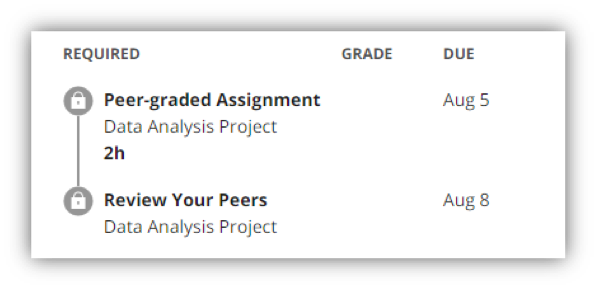

Provide external feedback for students’ self-monitoring. In conjunction with interview results, the MOOC review results demonstrated that MOOC instructors and TAs facilitated the discussion forums by addressing students’ confusion and encouraging peer-assessment (see Figure 4). Such external feedback might help students with their self-monitoring in learning.

Figure 4: Example of tool for peer-assessment in MOOCs.

Research Question #2: How Are Various Technologies Employed to Support Learners’ Self-Monitoring Skills?

Technologies play a vital role in MOOCs. MOOC instructors reported that a variety of technologies were used to facilitate students’ self-monitoring such as synchronous communication technologies, asynchronous communication technologies, and feedback tools. Follow-up research might manipulate different features or components with the intention of nurturing specific self-monitoring skills and behaviors.

Synchronous Communication Technologies

Synchronous communication technologies such as Google Hangouts and YouTube Live were used to host meetings with students. Instructors employing such tools reported that synchronous technologies can foster social interaction between instructors and students, which can further foster students’ self-monitoring. Ashley, for example, used YouTube Live to stream her lectures online and answer participant questions. Similarly, Fernando from Belgium stated that: “We have tried to create some kind of interaction. Like what I was saying, through Google Hangout[s], we let them communicate with each other, but also ask questions to this specific professor.”

Asynchronous Communication Technologies

Besides synchronous technologies, asynchronous communication technologies such as discussion forums, blogs, Padlet, Slackbot, and various social media were utilized to connect MOOC students for interactive discussions as well as to build learning communities. A social interaction environment might elevate student self-monitoring and foster their motivation. Discussion forums for asynchronous conferencing were commonly provided within the MOOC platform. Logan from the US stated that: “We also have a blog. We're posting things on to the blog, and helping them, helping keep it fresh.” Lucas from the US described how his students used discussion forums: “there was a lot of activity on the discussion boards. Some of the people were clearly not only following very closely, but also doing outside reading and bringing a wealth of knowledge to this [discussion].”

Feedback Tools

Aligned with the findings mentioned above, feedback is critical for student SDL. Technologies for formative and summative assessment were used to help students’ self-monitoring. As an example, Andrew from the UK employed learning analytics to monitor students’ learning and continue to improve his MOOC. Andrew noted that this additional data from learning analytics was helpful in restructuring his MOOC for a better pace of activities and resulting learner performance.

Recap of Self-Monitoring Tools and Techniques Employed

In sum, to support learners’ self-monitoring, the following strategies could be used in practice: (1) helping students set their own learning goals, (2) building a learning community, (3) offering immediate feedback, (4) embedding quizzes for self-assessment, (5) providing progress indicators, (6) including reflection questions, and (7) making available optional learning materials.

There were at least three key limitations of this research that future MOOC researchers and interpreters of this study should be aware of. First, MOOC instructor information was collected primarily from several key MOOC providers such as Coursera, FutureLearn, and edX. However, MOOCs that were not offered in English, such as those on XuetangX, were typically not included. Hence, there is an English language bias. Second, while the survey response rate was acceptable for an opt-in survey (Cho & LaRose, 1999), it was just 10%; this study would have had more powerful findings if the response rate had been double or triple what we encountered. Nevertheless, the response rate was much higher (18%) when only those opening the email invitation were considered. Naturally, higher response rates would have provided more robust data from which to draw conclusions about self-monitoring and other SDL skills and competencies. Finally, this study only examined MOOC instructors’ strategies used to facilitate student self-monitoring; we did not confirm whether the strategies reported by the MOOC instructor participants in this study were actually effective for their learners. As noted below, we are currently addressing this final limitation in a follow-up study.

This study examined the design and delivery of MOOCs to facilitate students’ self-monitoring skills. Despite the limited MOOC design and teaching experiences of the MOOC instructor participants, their previous traditional classroom teaching experience as well as their blended or online teaching experience played a role in informing their MOOC design to facilitate student self-monitoring. The results of this study indicated that MOOC instructors facilitated students’ self-monitoring through both internal feedback and external feedback. The internal feedback was related to their cognitive and metacognitive processing, which included monitoring their learning strategies and thinking about their thinking (Garrison, 1997).

These instructors relied on many techniques to foster SDL. For instance, to facilitate learners’ cognitive learning processes, strategies, such as quizzes for self-assessment, progress indicators, tutorials on technology use, learning tips, navigational aids for the course, instructional modeling and various other resources and supports, were reported by MOOC instructors. Important to this study, self-assessment and progress indicators encouraged learners to review and monitor their learning process. Such results corresponded with the findings reported a few years ago by Kulkarni et al (2013). Given these consistencies in the findings, now is the time to take the next step and create MOOC instructor and instructional designer training programs and instructional design templates based on these results. Clearly, there are many ways to operationalize the results revealed here.

Regarding how to facilitate metacognitive processing in this relatively new form of educational delivery, MOOC instructors encouraged students to think critically by providing reflection questions and assistance in building a learning community. This finding is in line with the research implications noted by Parker et al (1995), who discovered that encouraging reflection can improve learners’ SDL skills. Similarly, scholars such as Schraw (1998) have argued that reflection is crucial in building student metacognitive knowledge and self-monitoring skills. Likewise, Boud, Keogh and Walker (2013) emphasized the importance of using reflection to transfer the learning experience to novel settings and situations.

External feedback motivates students as well as helps them with their self-monitoring. The participants in this study reported that the feedback from MOOC instructors and TAs can help MOOC learners identify key issues. Furthermore, research indicates that peer-assessment can benefit both feedback providers and feedback receivers (Barak & Rafaeli, 2004; Dochy et al, 1999). Future MOOC research initiatives in this area might explore how different forms of feedback can have facilitative effects on learner SDL skills and competencies both in MOOCs and in other types of formal and informal educational delivery.

The second primary focus of this study related to the use of technology to facilitate self-monitoring. As indicated earlier, MOOC instructors leveraged a variety of technologies to facilitate self-monitoring. These technologies included: (1) synchronous communication technologies, (2) asynchronous communication technologies, and (3) feedback tools. Additional research might attempt to identify what aspects of these technologies are the most beneficial for SDL when enrolled in MOOCs.

Each of these diverse technologies served different purposes. First, the results indicated that these technologies support creating a learning community. MOOC instructors stated that synchronous technologies, such as Google Hangouts and YouTube Live, as well as asynchronous communication technologies, such as discussion forums, blogs, and social media, could support students’ social learning. These results were in line with the findings of Blaschke (2012) and Junco, Heiberger, and Loken (2011), who found that using social media supported student SDL. In addition, the findings supported Candy’s (1991) idea that SDL is realized in collaboration and interaction. Suffice it to say, the surveys, interviews, and content analyses shed light on key aspects of learner SDL skills and processes in MOOCs.

The findings of this study provide many useful insights for instructors and instructional designers into MOOC design and delivery to facilitate student self-monitoring for SDL. In addition, various technology tools and features that supported self-monitoring were identified. As such, software designers and programmers, as well as others involved in MOOC platform development and associated funding, might also benefit from this research. The findings of this study also offer implications for educators designing programs to enhance MOOC retention and completion rates, albeit indirectly.

This study was just the first step in the process of developing the tools and techniques for enhancing learner SDL. As MOOCs proliferate and become more widely accepted around the world (Bonk et al, 2015), especially in the developing world where many millions of people are first getting access to higher education (Zhang et al, 2020), many more studies in this area are now desperately needed. As part of such efforts, we plan to expand the current research study with additional MOOC instructor participants. At present, we are in the midst of another in-depth study examining students’ perceptions of effective self-monitoring strategies to, we hope, verify as well as expand upon the strategies mentioned by the instructors participating in this study. Importantly, the study results thus far confirm as well as extend the findings detailed here.

From what we have witnessed during the past decade, there is little chance of MOOCs abating in the near future. In fact, MOOCs have expanded to more than 100 million learners enrolling in over 11,000 MOOCs in 2018 alone (Shah, 2019). The time is ripe, therefore, for investigating whether cognitive and metacognitive processes needed in MOOCs can be enhanced and whether such skill enhancements might transfer to other learning-related settings and situations. In effect, the goal is for self-monitoring skills to transfer to other formal as well as informal learning environments and situations, especially as additional forms of open and distance learning emerge and evolve.

Clearly, much concerted effort needs to be undertaken, whether at the governmental, institutional, programmatic, or individual researcher level. For instance, MOOC researchers might want to explore different forms of instructional scaffolds and supports for SDL, whereas educators might want to design and evaluate innovative training programs for SDL in this age of massively open online teaching and learning. Designers of MOOC platforms as well as MOOC vendors might evaluate MOOC retention and completion rates resulting from the introduction of new technology tools and features for self-monitoring, self-management, and motivation. Government and non-profit agencies might provide seed money for such efforts as well as guidance on research gaps and possible SDL research methods to address those areas through reports, conferences and forums, and assorted high-profile initiatives. Given that the second half of humanity is now obtaining access to Internet-based forms of learning (The Economist, 2019), the understanding and advancement of SDL skills may become one of the most important challenges and opportunities of those living in the twenty-first century. Whatever the direction, the future is sure to be exciting!

Barak, M., & Rafaeli, S. (2004). On-line question-posing and peer-assessment as means for web-based knowledge sharing in learning. International Journal of Human-Computer Studies, 61(1), 84-103. https://doi.org/10.1016/j.ijhcs.2003.12.005

Beaven, T., Codreanu, T., & Creuzé, A. (2014). Motivation in a language MOOC: Issues for course designers. Retrieved from https://www.degruyter.com/downloadpdf/books/9783110422504/9783110422504.4/9783110422504.4.pdf

Blaschke, L. M. (2012). Heutagogy and lifelong learning: A review of heutagogical practice and self-determined learning. The International Review of Research in Open and Distributed Learning, 13(1), 56-71. doi: 10.19173/irrodl.v13i1.1076

Bonk, C. J. (2009). The world is open: How Web technology is revolutionizing education. San Francisco, CA: Jossey-Bass.

Bonk, C. J., Lee, M. M., Reeves, T. C., & Reynolds, T. H. (Eds.). (2015). MOOCs and open education around the world. New York: Routledge.

Boud, D., Keogh, R., & Walker, D. (2013). Reflection: Turning experience into learning. New York, NY: Routledge.

Brockett, R. G., & Hiemstra, R. (1991). Self-direction in adult learning: Perspectives on theory, research, and practice (Vol. 20). London: Routledge.

Candy, P. C. (1991). Self-direction for lifelong learning: A comprehensive guide to theory and practice. San Francisco, CA: Jossey-Bass.

Chang, M. M. (2007). Enhancing web-based language learning through self-monitoring. Journal of Computer Assisted Learning, 23(3), 187-196. doi: 10.1111/j.1365-2729.2006.00203.x

Cho, H., & LaRose, R. (1999). Privacy issues in Internet surveys. Social Science Computer Review, 17(4), 421-434. https://doi.org/10.1177/089443939901700402

Coleman, M. C., & Webber, J. (2002). Emotional and behavioral disorders. Boston, MA: Pearson Education Company.

Creswell, J. W., & Plano-Clark, V. L. (2017). Designing and conducting mixed methods research (3rd ed.). Thousand Oaks, CA: Sage.

Delclos, V. R., & Harrington, C. (1991). Effects of strategy monitoring and proactive instruction on children's problem-solving performance. Journal of Educational Psychology, 83(1), 35. Retrieved from https://psycnet.apa.org/buy/1991-19728-001

Dochy, F. J. R. C., Segers, M., & Sluijsmans, D. (1999). The use of self-, peer and co-assessment in higher education: A review. Studies in Higher Education, 24(3), 331-350. https://doi.org/10.1080/03075079912331379935

The Economist. (2019, June 8). The second half of humanity is joining the Internet: They will change it, and it will change them. The Economist. Retrieved from https://www.economist.com/leaders/2019/06/08/the-second-half-of-humanity-is-joining-the-internet

Fisher, M. J., & King, J. (2010). The self-directed learning readiness scale for nursing education revisited: A confirmatory factor analysis. Nurse Education Today, 30(1), 44-48. doi:10.1016/j.nedt.2009.05.020

Fraenkel, J. R., & Wallen, N. E. (2009). The nature of qualitative research. How to design and evaluate research in education (7th ed.). Boston: McGraw-Hill, 420.

Garrison, D. R. (1997). Self-directed learning: Toward a comprehensive model. Adult Education Quarterly, 48(1), 18-33. Retrieved from http://journals.sagepub.com/doi/pdf/10.1177/074171369704800103

Guest, G., Bunce, A., & Johnson, L. (2006). How many interviews are enough? An experiment with data saturation and variability. Field Methods, 18(1), 59-82. doi: 10.1177/1525822X05279903

Hewitt-Taylor, J. (2001). Self-directed learning: Views of teachers and students. Journal of Advanced Nursing, 36(4), 496-504. https://doi.org/10.1046/j.1365-2648.2001.02001.x

Junco, R., Heiberger, G., & Loken, E. (2011). The effect of Twitter on college student engagement and grades. Journal of Computer Assisted Learning, 27(2), 119-132. doi: 10.1111/j.1365-2729.2010.00387.x

Kell, C., & Deursen, R. V. (2002). Student learning preferences reflect curricular change. Medical Teacher, 24(1), 32-40. doi: 10.1080/00034980120103450

Kop, R., & Fournier, H. (2011). New dimensions to self-directed learning in an open networked learning environment. International Journal for Self-Directed Learning, 7(2), 1-19. Retrieved from https://docs.wixstatic.com/ugd/dfdeaf_b1740fab6ad144a980da1703639aeeb4.pdf

Kulkarni, C., Wei, K. P., Le, H., Chia, D., Papadopoulos, K., Cheng, J., & Klemmer, S. R. (2013). Peer and self-assessment in massive online classes. ACM Transactions on Computer-Human Interaction, 20(6), 33. doi: http://dx.doi.org/10.1145/2505057

Lan, W. Y. (1996). The effects of self-monitoring on students' course performance, use of learning strategies, attitude, self-judgment ability, and knowledge representation. The Journal of Experimental Education, 64(2), 101-115. Retrieved from https://www.jstor.org/stable/20152478

Leech, N. L., & Onwuegbuzie, A. J. (2007). An array of qualitative data analysis tools: A call for data analysis triangulation. School Psychology Quarterly, 22(4), 557. doi: 10.1037/1045-3830.22.4.557

Loizzo, J., Ertmer, P. A., Watson, W. R., & Watson, S. L. (2017). Adult MOOC learners as self-directed: Perceptions of motivation, success, and completion. Online Learning, 21(2). doi: 10.24059/olj.v21i2.889

Lunyk-Child, O. I., Crooks, D., Ellis, P. J., Ofosu, C., & Rideout, E. (2001). Self-directed learning: Faculty and student perceptions. Journal of Nursing Education, 40(3), 116-123. doi: 10.3928/0148-4834-20010301-06

Maag, J. W., Rutherford Jr., R. B., & Digangi, S. A. (1992). Effects of self-monitoring and contingent reinforcement on on-task behavior and academic productivity of learning disabled students: A social validation study. Psychology in the Schools, 29(2), 157-172. https://doi.org/10.1002/1520-6807(199204)29:2<157::AID-PITS2310290211>3.0.CO;2-F

Malone, L. D., & Mastropieri, M. A. (1991). Reading comprehension instruction: Summarization and self-monitoring training for students with learning disabilities. Exceptional Children, 58(3), 270-279. https://doi.org/10.1177/001440299105800309

Margaryan, A., Bianco, M., & Littlejohn, A. (2015). Instructional quality of Massive Open Online Courses (MOOCs). Computers & Education, 80, 77-83. doi:10.1016/j.compedu.2014.08.005

Merriam, S. B. (1988). Case study research in education: A qualitative approach. San Francisco, CA: Jossey-Bass.

Merriam, S. B. (2001). Andragogy and self-directed learning: Pillars of adult learning theory. New Directions for Adult and Continuing Education, 2001(89), 3-14. doi: 10.1002/ace.3

Merriam, S. B. (2009). Qualitative research: A guide to design and implementation. San Francisco, CA: Jossey-Bass.

Parker, D. L., Webb, J., & D'Souza, B. (1995). The value of critical incident analysis as an educational tool and its relationship to experiential learning. Nurse Education Today, 15(2), 111-116. doi: 10.1016/S0260-6917(95)80029-8

Patton, M. Q. (2002). Two decades of developments in qualitative inquiry: A personal, experiential perspective. Qualitative Social Work, 1(3), 261-283. https://doi.org/10.1177/1473325002001003636

Prociuk, J. L. (1990). Self-directed learning and nursing orientation programs: Are they compatible? The Journal of Continuing Education in Nursing, 21(6), 252-256. doi:10.3928/0022-0124-19901101-07

Ross, J., Sinclair, C., Knox, J., & Macleod, H. (2014). Teacher experiences and academic identity: The missing components of MOOC pedagogy. MERLOT Journal of Online Learning and Teaching, 10(1), 57. Retrieved from http://jolt.merlot.org/vol10no1/ross_0314.pdf

Schraw, G. (1998). Promoting general metacognitive awareness. Instructional Science, 26(1-2), 113-125. Retrieved from https://link.springer.com/article/10.1023/A:1003044231033

Schunk, D. H. (1982). Progress self-monitoring: Effects on children’s self-efficacy and achievement. The Journal of Experimental Education, 51(2), 89-93. https://doi.org/10.1080/00220973.1982.11011845

Shah, D. (2017). MOOCs find their audience: Professional learners and universities. Class Central. Retrieved from https://www.classcentral.com/report/moocs-find-audience-professional-learners-universities/

Shah, D. (2019). Year of MOOC-based degrees: A review of MOOC stats and trends in 2018. Class Central. Retrieved from https://www.class-central.com/report/moocs-stats-and-trends-2018/

Terras, M. M., & Ramsay, J. (2015). Massive open online courses (MOOCs): Insights and challenges from a psychological perspective. British Journal of Educational Technology, 46(3), 472-487. doi: 10.1111/bjet.12274

Watson, S. L., Loizzo, J., Watson, W. R., Mueller, C., Lim, J., & Ertmer, P. A. (2016). Instructional design, facilitation, and perceived learning outcomes: An exploratory case study of a human trafficking MOOC for attitudinal change. Educational Technology Research and Development, 64(6), 1273-1300. doi:10.1007/s11423-016-9457-2

Williamson, S. N. (2007). Development of a self-rating scale of self-directed learning. Nurse Researcher, 14(2), 66–83. Retrieved from https://search.proquest.com/openview/c7980aea8ee20b570c57e9102cf5b9ea/1?pq-origsite=gscholar&cbl=33100

Zhang, K., Bonk, C. J., Reeves, T. C., & Reynolds, T. H. (2020). MOOCs and Open Education in the Global South: Successes and challenges. In K. Zhang, C. J. Bonk, T. C. Reeves, & T. H. Reynolds (Eds.), MOOCs and Open Education in the Global South: Challenges, successes, and opportunities. New York, NY: Routledge.

Zhu, M., & Bonk, C. J. (2019a). Designing MOOCs to facilitate participant self-monitoring for self-directed learning. Online Learning, 23(4), 106-134. doi:10.24059/olj.v23i4.2037.

Zhu, M., & Bonk, C. J. (2019b). Designing MOOCs to facilitate participant self-directed learning: An analysis of instructor perspectives and practices. International Journal of Self-Directed Learning, 16(2), 39-60. Retrieved from: http://publicationshare.com/pdfs/Designing-MOOCs-for-SDL.pdf

Zhu, M., Sari, A., & Lee, M. M. (2018). A systematic review of research methods and topics of the empirical MOOC literature (2014–2016). The Internet and Higher Education, 37, 31-39. https://doi.org/10.1016/j.iheduc.2018.01.002

Zimmerman, B. J. (1995). Self-efficacy and educational development. In A. Bandura (Ed.), Self-efficacy in changing societies (pp. 202-231). New York, NY: Cambridge University Press.

Zimmerman, B. J., & Paulsen, A. S. (1995). Self-monitoring during collegiate studying: An invaluable tool for academic self-regulation. New Directions for Teaching and Learning, 1995(63), 13–27. https://doi.org/10.1002/tl.37219956305

Authors:

Meina Zhu is an Assistant Professor in the Learning Design and Technology program in the College of Education at Wayne State University. Her research interests include online education, MOOCs, self-directed learning, STEM education, and active learning. Email: meinazhu@wayne.edu

Curtis J. Bonk is a Professor of Instructional Systems Technology at Indiana University and author/editor of a dozen books in the field of educational technology and e-learning. He is a passionate and energetic speaker, writer, educational psychologist, instructional technologist, and entrepreneur as well as a former CPA/corporate controller (homepage: http://curtbonk.com/). Email: cjbonk@indiana.edu

Cite this paper as: Zhu, M., & Bonk, C.J. (2020). Technology tools and instructional strategies for designing and delivering MOOCs to facilitate self-monitoring of learners. Journal of Learning for Development, 7(1), 31-45.