VOL. 4, No. 2

Abstract: Open University Malaysia (OUM) is progressively moving towards implementing assessment on demand and online assessment. This move is deemed necessary for OUM to continue to be the leading provider of flexible learning. OUM serves a very large number of students each semester, and these students are widely distributed throughout the country. As the number of learners keeps growing, the task of managing and administering examinations every semester has become increasingly laborious, time consuming and costly. To deal with this situation and improve the assessment processes, OUM has embarked on the development and use of a test management system, named OUM QBank. The initial objectives of QBank development were to enable the systematic classification and storage of test items, as well as the auto-generation of test papers based on the required criteria. However, it was later agreed that QBank should be a more comprehensive test management system that not only manages all assessment items but also includes features to facilitate quality control and flexibility of use. These include the functionality to perform item analyses and to conduct online examinations. This paper identifies the key elements and the important theoretical basis for ensuring the design and development of an effective and efficient system.

One key feature of open and distance learning (ODL) institutions is the provision of flexible learning. The flexibility to learn in terms of time and locality is probably one main reason that makes ODL institutions a preferred choice of learning for working people and adult learners. There has been a rapid increase in the learner population in many such institutions. With the growth of the number of learners, the task of administering formal assessments such as developing items, maintaining item quality and conducting tests and examinations becomes tedious and laborious. In fact, the administrative processes of assessment and evaluation can become a nightmare for ODL institutions (Okonkwo, 2010). The same issue is faced by Open University Malaysia (OUM), which started operation with only a few hundred learners but whose cumulative learner population has surpassed 150,000 in just over a decade. As the learner population keeps increasing, the administration of examinations for each semester has become increasingly laborious, time consuming and costly. Every semester the assessment department of OUM faces the challenge of managing and conducting examinations for more than 25,000 students at 37 OUM learning centres throughout the country. Every semester, new sets of examination papers are prepared. The process involves identifying and engaging qualified subject matter experts to prepare examination questions and marking schemes. This creates a challenge for OUM in making sure there is consistency in the quality of the examination papers prepared. The task of reviewing and moderating these examination questions and marking schemes is time consuming as well. Printing and delivering examination papers to the various examination centres, and collecting and sending the answer scripts back to the examination department, is also costly and challenging.
Additional measures have to be taken to ensure security of the delivery of the examination papers as well as the answer scripts, to avoid any form of leakage. Scheduling and administering the examinations must be done carefully and efficiently as well.

The challenge of managing assessment and administering examinations to the masses is clearly not unique to OUM. Anadolu University, for example, serves about a million students throughout the region, and the university has created a test bank to house all the exam items created for the courses offered. Students can prepare for their examinations by completing practice tests online (Mutlu, Erorta, & Yilmaz, 2004). Exam booklets and optically readable answer sheets are printed and distributed with additional security measures to all the centers where the examinations are administered (Latchem, Ozkul, Aydin, & Mutlu, 2006). There is clearly a need to leverage technology to minimize the issues and challenges related to assessment management and administration. OUM requires a test management system that not only helps minimize the manual processes involved in administering assessments, but also ensures the quality of the examination papers generated. In addition, the test management system includes functionalities for online examination that will support flexible entry and exit. This paper describes the design and development process of the OUM test management system, also known as the OUM QBank system.

A test management system is not a new concept. It has long been advocated as a possible tool for managing tests and examinations effectively and efficiently (Choppin, 1976). Nevertheless, traditional item banking systems are little more than basic test-item storage systems. According to Estes (1985), these systems support the mass storage and easy selection and retrieval of items used as examination questions. There was little emphasis on automating the generation of tests or on the test quality control process. With the advancement of technology related to item banking, it is now possible for learning institutions to develop more comprehensive test management systems that offer much additional functionality beyond basic systematic storage and retrieval. Besides automation of processes, the important functionalities should include the capability to ensure the quality and consistency of the test papers generated.

OUM QBank was designed with the main objective of reducing the laborious manual process of examination or test items preparation and administration and to ensure the quality of examination papers prepared. To achieve the objective, the design of an effective and efficient test management system should have the following unique features:

i) Systematic Storage Structure

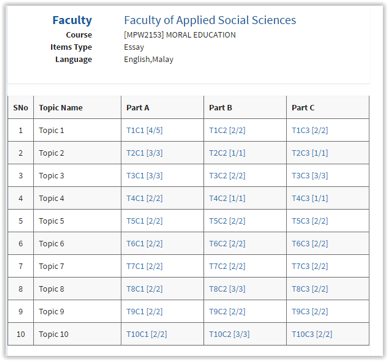

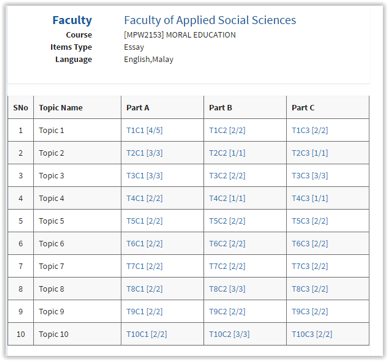

There are three kinds of assessment items stored in OUM QBank: essay-type test items, multiple-choice question (MCQ) items and items in the form of assignment tasks. For the essay-type and MCQ items, the storage is structured to categorize items by subject, topic and cognitive level of difficulty. Figure 1 illustrates the structure of QBank item storage. The storage consists of 30 storage cells, each specified by topic and cognitive level; for example, T1C1 [4/5] indicates Topic 1, Cognitive Level Low, with 4 of 5 items approved.

Figure 1: QBank Item Storage
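The storage structure described above can be sketched in a few lines of Python. This is only an illustration, not the QBank implementation: the names `Cell` and `build_bank` are hypothetical, and the split of the 30 cells into 10 topics by 3 cognitive levels is an assumption, since only the total is stated.

```python
from dataclasses import dataclass, field

LEVELS = ("C1", "C2", "C3")  # low, medium and high cognitive levels


@dataclass
class Cell:
    """One storage cell: all items for a given topic and cognitive level."""
    topic: int
    level: str
    items: list = field(default_factory=list)  # (item_id, approved) pairs

    def add(self, item_id, approved=False):
        self.items.append((item_id, approved))

    def label(self):
        # Formats the cell in the "T1C1 [approved/total]" style used in Figure 1.
        approved = sum(1 for _, ok in self.items if ok)
        return f"T{self.topic}{self.level} [{approved}/{len(self.items)}]"


def build_bank(num_topics):
    """Create an empty grid of cells keyed by (topic, level)."""
    return {(t, lv): Cell(t, lv)
            for t in range(1, num_topics + 1)
            for lv in LEVELS}


# Assumed layout: 10 topics x 3 levels = 30 cells.
bank = build_bank(num_topics=10)
for i in range(5):
    bank[(1, "C1")].add(f"item-{i}", approved=(i < 4))

print(bank[(1, "C1")].label())  # T1C1 [4/5]
```

Keying the cells by a (topic, level) pair keeps the lookup for any cell direct, which is convenient when items are later drawn cell by cell.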

ii) Item Entry Interface

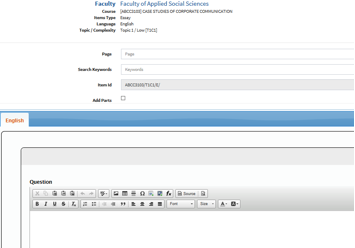

OUM QBank is designed to provide a user-friendly interface for easy entry of items. Figure 2 shows a screenshot of the item-entry interface. The interface allows the user to type items directly and save them into the system. Alternatively, the user may prepare the items in Microsoft Word and use the normal copy-paste method to deposit items into the system.

Figure 2: Item-Entry Interface

iii) Table of Specification

A table of specification serves as a guide in the preparation of examination questions. It helps to ensure an appropriate distribution of topics and levels of difficulty in an examination paper. Therefore, the use of a table of specification is an important step in the preparation of an examination paper. The table of specification is known as the Item Distribution Table (IDT) and takes the form of a table that displays the distribution of the examination questions for a given subject according to the topics to be tested and the cognitive level of the questions. The table of specification is prepared based on the content of the learning module. This ensures that the test items are representative of the content covered in the module. Having a good distribution of questions that are representative of the whole module also helps ensure content validity (Jandaghi & Shaterian, 2008).

Another important dimension to be considered when building the table of specification is the distribution of items according to the different levels of cognition. The levels are based on Bloom’s Taxonomy, which defines six levels of cognition: knowledge, comprehension, application, analysis, synthesis and evaluation. These six levels are clustered into three cognitive levels: low (C1), medium (C2) and high (C3).
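As a rough illustration of how items tagged with Bloom levels could be tabulated into an item distribution table, the Python sketch below counts items per topic and cognitive cluster. The pairing of Bloom levels into clusters (two levels per cluster, in order) is an assumption made for the example; the exact grouping used in QBank is not specified here. The function names are hypothetical.

```python
from collections import Counter

# Assumed grouping: consecutive pairs of Bloom levels form one cluster.
BLOOM_TO_CLUSTER = {
    "knowledge": "C1",
    "comprehension": "C1",
    "application": "C2",
    "analysis": "C2",
    "synthesis": "C3",
    "evaluation": "C3",
}


def cognitive_cluster(bloom_level):
    """Map a Bloom level name to its cluster (C1, C2 or C3)."""
    return BLOOM_TO_CLUSTER[bloom_level.strip().lower()]


def item_distribution_table(items):
    """Count items per (topic, cluster).

    `items` is an iterable of (topic_number, bloom_level) pairs.
    """
    return Counter((topic, cognitive_cluster(bloom)) for topic, bloom in items)


sample_items = [
    (1, "Knowledge"),
    (1, "Comprehension"),
    (1, "Analysis"),
    (2, "Evaluation"),
]
idt = item_distribution_table(sample_items)

# Print one row per topic, with one column per cluster.
for topic in sorted({t for t, _ in idt}):
    row = [idt[(topic, c)] for c in ("C1", "C2", "C3")]
    print(f"Topic {topic}: C1={row[0]} C2={row[1]} C3={row[2]}")
```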

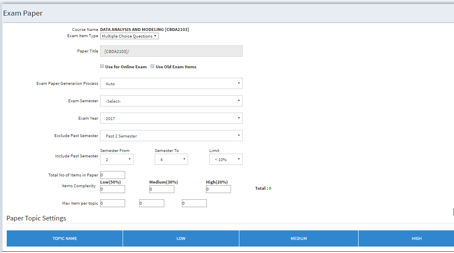

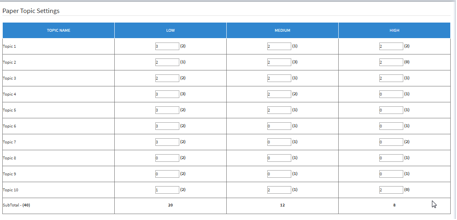

OUM QBank is designed to allow the generation of tables of specification based on user-set criteria. Figure 3 shows the item selection or filtering criteria, which includes:

Figure 3: Specification Criteria for Examination Paper Generation

Figure 3a: Table of Specification of the Examination Paper

iv) Test Paper Generation

To minimize laborious manual tasks, all the examination templates have been built into QBank. Once a test-specification table is generated, the system can generate the test paper in the required print-ready format.

v) Item Analyses

After an examination, the examination results can be imported into the QBank system to enable item analyses. The difficulty index and discrimination index can be generated for every item. The discrimination index describes the extent to which a particular test item is able to differentiate the higher scoring students from the lower scoring students. The item difficulty index shows the proportion of the total group answering the item correctly. This information serves as a reference for the user on the quality of the items that have been developed and supports decisions about how each item is functioning. This in turn helps the faculty to identify poor items that need to be reviewed, enhanced or discarded.
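The two indices can be computed from scored responses as in the Python sketch below. It is a minimal illustration rather than the QBank routine: the discrimination index is computed with the common upper-group versus lower-group method, and the default group size of 27% of candidates is an assumption, as is the function name `item_analysis`.

```python
def item_analysis(responses, group_fraction=0.27):
    """Return a (difficulty, discrimination) pair for every item.

    `responses` holds one list per student, with 1 for a correct answer
    and 0 for an incorrect one, in the same item order for all students.
    """
    n_students = len(responses)
    n_items = len(responses[0])

    # Rank students by total score, then take the top and bottom groups.
    ranked = sorted(responses, key=sum, reverse=True)
    g = max(1, round(n_students * group_fraction))
    upper, lower = ranked[:g], ranked[-g:]

    results = []
    for j in range(n_items):
        # Difficulty: proportion of all students answering item j correctly.
        difficulty = sum(r[j] for r in responses) / n_students
        # Discrimination: difference in proportion correct, upper vs lower.
        discrimination = (sum(r[j] for r in upper)
                          - sum(r[j] for r in lower)) / g
        results.append((difficulty, discrimination))
    return results


scores = [
    [1, 1, 1],  # total 3
    [1, 1, 0],  # total 2
    [1, 0, 0],  # total 1
    [0, 0, 0],  # total 0
]
# With four students, use halves as the upper and lower groups.
for idx, (p, d) in enumerate(item_analysis(scores, group_fraction=0.5), 1):
    print(f"Item {idx}: difficulty={p:.2f}, discrimination={d:.2f}")
```

A difficulty value near 1 means almost everyone answered correctly, while a discrimination value near zero or below zero flags an item that does not separate stronger from weaker candidates and may need review.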

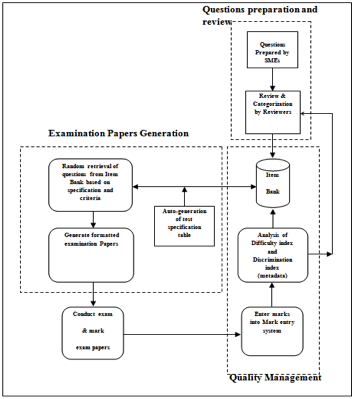

Figure 4 provides a visual representation of the OUM QBank system framework in relation to the complete process of item preparation, test generation, examination, item analyses and item review.

Stage 1: Question Preparation and Review

Course subject matter experts (SMEs) are assigned to develop question items. The question items are reviewed by item moderators before the items are stored according to the topics and cognitive levels.

Stage 2: Examination Paper Generation

The user can specify the output of the examination paper based on the table of specification criteria selection. The question items are randomly selected from each cell based on the selected set of criteria. The examination paper can then be generated based on the pre-determined format that was set for each course.
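The random selection of items from each cell can be sketched as follows. This is an illustrative Python example under stated assumptions: the bank is represented as a dictionary from (topic, level) to a list of approved item identifiers, the specification as a dictionary from (topic, level) to the number of questions required, and the function name `generate_paper` is hypothetical.

```python
import random


def generate_paper(bank, spec, rng=None):
    """Draw the requested number of items at random from each cell.

    bank: {(topic, level): [approved item ids]}
    spec: {(topic, level): number of questions required}
    """
    rng = rng or random.Random()
    paper = []
    for cell, count in spec.items():
        pool = bank.get(cell, [])
        if count > len(pool):
            raise ValueError(
                f"cell {cell} has {len(pool)} approved items, needs {count}")
        # Sampling without replacement avoids duplicates within one paper.
        paper.extend(rng.sample(pool, count))
    return paper


demo_bank = {
    (1, "C1"): ["Q101", "Q102", "Q103"],
    (2, "C3"): ["Q231", "Q232"],
}
demo_spec = {(1, "C1"): 2, (2, "C3"): 1}

print(generate_paper(demo_bank, demo_spec))
```

Raising an error when a cell holds too few approved items makes a shortage in the bank visible at generation time instead of silently producing a shorter paper.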

Stage 3: Administration of Examination

The system allows online tests as well as the generation of physical examination papers. Physical examination papers can use both open-ended and multiple-choice question formats, whereas the online test is in multiple-choice question format only.

Stage 4: Analysis of Quality of Items

Data from students’ responses are accessible through the Online Marks Entry System currently used at OUM for multiple-choice questions. The data are further analysed using the difficulty index and the discrimination index. Based on the analysis, items are enhanced or discarded from the item bank.

Figure 4: Framework for OUM’s Test Management System

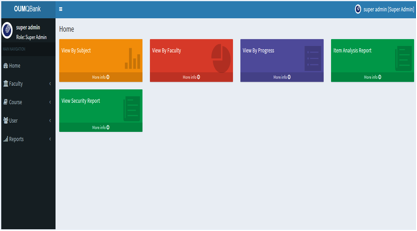

Figure 5 shows the login screen and Figure 6 shows the dashboard for QBank users. The functions displayed vary according to the role of the user logged into QBank (super administrator, item entry operator, item entry reviewer, chief reviewer, faculty administrator or faculty dean). Functions for each role are clearly defined and each role has a different level of security and access.

Figure 5: OUM QBank Login Screen

Figure 6: OUM QBank Dashboard

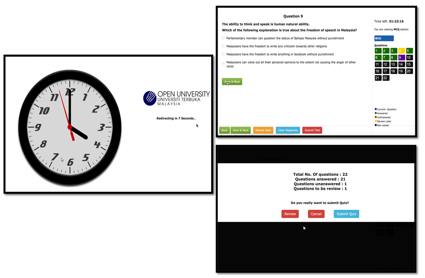

The Online Examination System is a distinctive sub-feature of the QBank system that deserves to be highlighted. The features and framework described earlier are meant for ‘Offline’ delivery of an examination paper. This means that test papers generated from the system can be printed as hard-copy examination papers to be administered at various learning centers. The online component of the examination paper generation system is an extension of the main QBank system. It allows the generated test paper to be displayed online so that the test can be conducted in an online environment. To deliver an examination paper created for ‘Online Exam’ purposes, the QBank system needs to include an additional ‘Online subsystem’ through which students log in to take their examination at pre-scheduled dates and times. Figure 7 shows screenshots of the online testing system.

Figure 7: OUM Online Testing System

The Online Subsystem is designed to integrate with OUM’s campus student management system to perform the following two important functions:

Since the examination is taken online, both examination results and item analyses can be processed in real-time.

Security is one of the major considerations throughout the design of the system infrastructure and architecture, to ensure that data in the system is protected from attackers and from unauthorized access that could result in theft, loss, misuse or modification.

Various methods of user authentication were explored, including biometrics and facial recognition technologies. For cost effectiveness and efficiency, the familiar two-factor authentication, the same as that used by banks, is implemented for all users at all levels accessing the system, protecting each account with a password and the user’s personal mobile phone. Two-factor authentication can drastically reduce the probability of online identity theft, phishing and other online fraud and, thus, helps secure the entire system. Figure 8 shows the dialog screen for entering the authentication code, which is sent to the user’s mobile phone.

Figure 8: Enter Authentication Code Screen
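The second authentication factor, a code sent to the user's mobile phone, could work along the lines of the Python sketch below. It is a simplified illustration, not the QBank implementation: the six-digit length, the five-minute validity window, the in-memory store and the function names are all assumptions, and the actual delivery of the code by SMS is left out.

```python
import hmac
import secrets
import time

CODE_DIGITS = 6         # assumed code length
CODE_TTL_SECONDS = 300  # assumed validity window (5 minutes)

_pending = {}  # user_id -> (code, expiry timestamp); in-memory for the sketch


def issue_code(user_id, now=None):
    """Create a one-time code for a user who has passed the password check."""
    now = time.time() if now is None else now
    # secrets uses a cryptographically strong random source.
    code = f"{secrets.randbelow(10 ** CODE_DIGITS):0{CODE_DIGITS}d}"
    _pending[user_id] = (code, now + CODE_TTL_SECONDS)
    return code  # in practice sent to the phone, never shown in the browser


def verify_code(user_id, submitted, now=None):
    """Check a submitted code; each issued code can be tried only once."""
    now = time.time() if now is None else now
    entry = _pending.pop(user_id, None)
    if entry is None:
        return False
    code, expiry = entry
    # compare_digest avoids leaking information through comparison timing.
    matches = hmac.compare_digest(code.encode(), submitted.encode())
    return now <= expiry and matches
```

In this sketch a code is discarded after the first verification attempt, so a wrong guess forces a new code to be issued; a production system would typically also limit how often new codes can be requested.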

First, although QBank is capable of generating examination papers on demand to meet flexible examination times and locations, the system needs to have sufficient items deposited into the database. Otherwise, there is a high probability that items will be repeated across papers.

Second, despite the commendable security measures taken to authenticate the registered candidate, it is still a challenge to prove that the rightful candidate is actually answering the questions if the examination is taken from locations not monitored by OUM, for example in the candidate’s home.

Third, and not least, the quality of items deposited into the item database depends very much on the subject matter experts producing quality items. The input into QBank will determine the output, in the form of the examination paper. The programmed item analysis indices are limited to the multiple-choice question format only. As such, the quality of open-ended questions is unlikely to be improved or enhanced through item analysis.
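The first limitation, the risk of repeated items when the bank is small, can be quantified with a short calculation. The Python sketch below assumes that two papers each draw the same number of items uniformly at random, without replacement and independently, from one cell; the function name `repeat_risk` is hypothetical. Under that assumption the number of shared items follows a hypergeometric distribution.

```python
from math import comb


def repeat_risk(pool_size, per_paper):
    """Repetition risk for two papers drawn from the same cell.

    Returns (expected number of shared items,
             probability that at least one item is shared).
    """
    if not 0 < per_paper <= pool_size:
        raise ValueError("per_paper must be between 1 and pool_size")
    # Mean of the hypergeometric distribution: k * k / N.
    expected_shared = per_paper ** 2 / pool_size
    # P(no overlap): the second paper picks only from the N - k unused items.
    p_none = comb(pool_size - per_paper, per_paper) / comb(pool_size, per_paper)
    return expected_shared, 1 - p_none


for pool in (5, 20, 100):
    expected, p_any = repeat_risk(pool_size=pool, per_paper=2)
    print(f"pool={pool:3d}: expected shared={expected:.2f}, "
          f"P(at least one repeat)={p_any:.2f}")
```

For a cell with 5 approved items and 2 questions drawn per paper, two papers share 0.8 items on average and have a 70% chance of sharing at least one, which illustrates why each cell needs a large pool before on-demand generation is practical.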

There is no doubt that the QBank system facilitates the assessment processes. The system stores items, provides a table of specification for selecting items from the database, generates examination papers, analyses multiple-choice question items and is able to deliver examination papers for online testing. It was possible to develop these functionalities thanks to a well-designed development process that included system requirement analysis, user-interface design, functionality design and user-acceptance testing. Despite its limitations, the OUM QBank system has been successfully implemented. It is hoped that with the implementation of the QBank system, assessment can be conducted not only in a more efficient and effective manner, but also in a more flexible way, paving the way towards the flexible entry and exit of learners.

Choppin, B. (1976, June). Developments in item banking. Paper presented at the First European Contact Workshop, Windsor, UK.

Estes, G. D. (Ed.). (1985). Examples of item banks to support local test development: Two case studies with reactions. Washington, DC: National Institute of Education.

Jandaghi, G., & Shaterian, F. (2008). Validity, reliability and difficulty indices for instructor-built exam questions. Journal of Applied Quantitative Methods, 3(2), 151-155. Retrieved from http://files.eric.ed.gov/fulltext/EJ803060.pdf

Latchem, C., Özkul, A.E., Aydin, C.H., & Mutlu, M.E. (2006). The Open Education System, Anadolu University, Turkey: E-transformation in a mega-university. Open Learning: The Journal of Open, Distance and e-Learning, 21, 221-235.

Mutlu, M. E., Erorta, Ö. Ö., & Yılmaz, Ü. (2004, October). Efficiency of e-learning in open education. In First International Conference on “Innovations in Learning for the Future: e-Learning”, 26-27, October 2004, Istanbul.

Authors

Dr. Safiah Md Yusof is an Associate Professor and currently the Deputy Director for the Institute for Teaching and Learning Advancement (ITLA) at Open University Malaysia. She is also Head of the Online Learning Support Unit at ITLA, OUM. Email: safiah_mdyusof@oum.edu.my

Tick Meng Lim is a Professor and currently the Director for the Institute for Teaching and Learning Advancement (ITLA) at OUM. Email: limtm@oum.edu.my

Leo Png is currently the CEO of IMPAC Media Pte Ltd based in Singapore. Email: leo@impacmedia.sg

Dr. Zainuriyah Abd Khatab is currently a lecturer at OUM and Head of Assessment Support Unit for the Institute for Teaching and Learning Advancement at OUM. Email: zainuriyah@oum.edu.my

Dr. Harvinder Kaur Dharam Singh is currently a lecturer at OUM and the Head of Face-To-Face Support Unit for the Institute for Teaching and Learning Advancement at OUM. Email: harvinder@oum.edu.my